- Optimal Stopping Problems; One-Step-Look-Ahead Rule

- The Secretary Problem.

- Infinite Time Stopping
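For the secretary problem, the cutoff rule is known to be optimal: reject the first $r-1$ candidates, then accept the first one who beats everyone seen so far. The sketch below computes its exact success probability and searches for the best cutoff. The function names `success_probability` and `best_cutoff` are hypothetical, chosen only for illustration.

```python
def success_probability(n, r):
    """Probability of selecting the best of n candidates (arriving in uniformly
    random order) when the first r - 1 are rejected and the first later
    candidate better than all previous ones is accepted."""
    if r == 1:
        return 1.0 / n  # accept the very first candidate
    # The best candidate sits at position i >= r with probability 1/n, and it is
    # chosen iff the best of the first i - 1 candidates lies among the first r - 1.
    return (r - 1) / n * sum(1.0 / (i - 1) for i in range(r, n + 1))


def best_cutoff(n):
    """Return (r, probability) maximising the success probability over r."""
    return max(((r, success_probability(n, r)) for r in range(1, n + 1)),
               key=lambda pair: pair[1])


r, p = best_cutoff(100)
print(r, round(p, 4))
```

For $n = 100$ the optimal cutoff should land near $n/e \approx 37$, with a success probability close to $1/e$.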

Category: Optimization

Algorithms for MDPs

- High level idea: Policy Improvement and Policy Evaluation.

- Value Iteration; Policy Iteration.

- Temporal Differences; Q-factors.
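Value iteration and policy iteration can be sketched for a finite, discounted MDP as follows. The sketch assumes reward maximisation, a discount factor `beta < 1`, transition matrices stored as `P[a, s, s']` and rewards as `R[s, a]`. These conventions and the function names are assumptions for illustration, not the post's notation. Both routines work through the Q-factors $Q(s,a) = R(s,a) + \beta \sum_{s'} P(s' \mid s,a) V(s')$.

```python
import numpy as np


def q_factors(P, R, V, beta):
    """Q(s, a) = R(s, a) + beta * sum_t P[a, s, t] V(t)."""
    return R + beta * np.einsum("ast,t->sa", P, V)


def value_iteration(P, R, beta, tol=1e-10):
    """Apply the Bellman optimality operator until the sup-norm change < tol."""
    V = np.zeros(R.shape[0])
    while True:
        Q = q_factors(P, R, V, beta)
        V_new = Q.max(axis=1)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, Q.argmax(axis=1)  # values and a greedy policy
        V = V_new


def policy_iteration(P, R, beta):
    """Alternate exact policy evaluation with greedy policy improvement."""
    S = R.shape[0]
    states = np.arange(S)
    policy = np.zeros(S, dtype=int)
    while True:
        # Policy evaluation: solve V = r_pi + beta * P_pi V as a linear system.
        P_pi = P[policy, states]   # row s is P[policy[s], s, :]
        r_pi = R[states, policy]
        V = np.linalg.solve(np.eye(S) - beta * P_pi, r_pi)
        # Policy improvement: act greedily with respect to the current V.
        new_policy = q_factors(P, R, V, beta).argmax(axis=1)
        if np.array_equal(new_policy, policy):
            return V, policy
        policy = new_policy
```

On a random MDP the two methods should agree on both the optimal values and the optimal policy (barring exact ties between actions).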

Infinite Time Horizon, MDP

- Positive Programming, Negative Programming & Discounted Programming.

- Optimality Conditions.

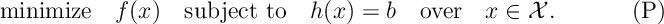

Lagrangian Optimization

We are interested in solving the constrained optimization problem

Talagrand’s Concentration Inequality

We prove a powerful inequality which provides very tight Gaussian tail bounds, of the form $e^{-ct^2}$, for probabilities on product state spaces. Talagrand’s Inequality has found many applications in probability and combinatorial optimization and, when it applies, it generally outperforms inequalities like Azuma-Hoeffding.

Spitzer’s Lyapunov Ergodicity

We show that, for a continuous-time Markov chain, the relative entropy of the distribution at time $t$ with respect to the stationary distribution decreases in $t$.
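The decrease can be checked numerically. This is a sketch, assuming a small irreducible chain with generator matrix `Q`. It computes $p_0 e^{Qt}$ by uniformization (a standard technique, swapped in here to avoid extra dependencies) and evaluates $D(p_t \,\|\, \pi)$ on a time grid. All function names are hypothetical.

```python
import numpy as np


def stationary_distribution(Q):
    """Solve pi Q = 0 with sum(pi) = 1 (assumes Q is irreducible)."""
    n = Q.shape[0]
    A = np.vstack([Q.T, np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi


def distribution_at(Q, p0, t, terms=200):
    """p0 exp(Qt) by uniformization: jumps of the stochastic matrix
    P = I + Q / lam occur at the times of a Poisson process of rate lam."""
    lam = -np.diag(Q).min()              # rate >= every state's exit rate
    P = np.eye(Q.shape[0]) + Q / lam     # a stochastic matrix
    weight = np.exp(-lam * t)            # Poisson(lam t) probability of 0 jumps
    term = p0.astype(float)
    p = weight * term
    for k in range(1, terms):
        term = term @ P                  # distribution after k jumps
        weight *= lam * t / k            # Poisson(lam t) probability of k jumps
        p = p + weight * term
    return p


def relative_entropy(p, q):
    """D(p || q) = sum_x p(x) log(p(x) / q(x))."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))
```

The number of terms kept in the uniformization series (`terms=200`) is ample for moderate $\lambda t$; for large $\lambda t$ it would need increasing.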

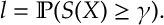

Cross Entropy Method

In the Cross Entropy Method, we wish to estimate the likelihood

$$\ell = \mathbb{P}\big(S(X) \geq \gamma\big).$$

Here $X$ is a random variable whose distribution is known and belongs to a parametrized family of densities $\{f(\cdot\,; v)\}$. Further, $S(X)$ is often the solution to an optimization problem.
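A minimal sketch of the multilevel Cross Entropy iteration, assuming a deliberately simple toy case: $X$ is exponential with mean $u = 1$, the family $f(\cdot\,; v)$ is exponential with mean $v$, and $S(X) = X$, so the exact answer $e^{-\gamma}$ is known. The names `ce_rare_event` and `likelihood_ratio`, and the parameters `rho` and `n`, are hypothetical choices for illustration. Each round raises the level to a sample quantile and refits $v$ by a likelihood-ratio-weighted mean; the final estimate uses importance sampling under the fitted $v$.

```python
import numpy as np


def likelihood_ratio(x, u, v):
    """f(x; u) / f(x; v) for exponential densities with means u and v."""
    return (v / u) * np.exp(-x / u + x / v)


def ce_rare_event(gamma, u=1.0, n=10_000, rho=0.1, seed=0, max_iter=50):
    """Estimate P(X >= gamma) for X ~ Exp(mean u) via the CE method."""
    rng = np.random.default_rng(seed)
    v = u
    for _ in range(max_iter):
        x = rng.exponential(scale=v, size=n)
        s = x                                 # performance S(X) = X (toy choice)
        level = min(gamma, np.quantile(s, 1 - rho))
        hit = s >= level                      # "elite" samples above the level
        w = likelihood_ratio(x[hit], u, v)
        v = np.sum(w * x[hit]) / np.sum(w)    # CE update for the mean parameter
        if level >= gamma:
            break
    # Final importance-sampling estimate under the fitted density f(.; v).
    x = rng.exponential(scale=v, size=n)
    estimate = np.mean((x >= gamma) * likelihood_ratio(x, u, v))
    return estimate, v


est, v = ce_rare_event(10.0)
```

In this toy case the fitted mean should end up near $\gamma + 1$, and the estimate should be close to $e^{-10} \approx 4.5 \times 10^{-5}$, an event that plain Monte Carlo with $10^4$ samples would almost never observe.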

Online Convex Optimization

We consider the setting of sequentially optimizing the average of a sequence of functions, so-called online convex optimization.
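One standard algorithm for this setting is projected online gradient descent, sketched here (not necessarily the algorithm developed in the post). The sketch assumes hypothetical losses $f_t(x) = (x - z_t)^2$ on the interval $[0,1]$, so the diameter is $D = 1$ and gradients are bounded by $G = 2$, and it uses the step size $\eta_t = D/(G\sqrt{t})$. With this step size the regret against the best fixed point in hindsight is known to be at most $\tfrac{3}{2} G D \sqrt{T}$.

```python
import numpy as np


def online_gradient_descent(z, D=1.0, G=2.0):
    """Projected OGD on f_t(x) = (x - z_t)^2 over [0, 1]; returns the regret."""
    x = 0.5
    total = 0.0
    for t, zt in enumerate(z, start=1):
        total += (x - zt) ** 2                  # loss of x_t, chosen before z_t is seen
        grad = 2.0 * (x - zt)                   # gradient of f_t at x_t
        eta = D / (G * np.sqrt(t))              # step size eta_t = D / (G sqrt(t))
        x = min(1.0, max(0.0, x - eta * grad))  # project back onto [0, 1]
    best = np.mean(z)                           # best fixed decision in hindsight
    best_loss = np.sum((best - z) ** 2)
    return total - best_loss


z = np.random.default_rng(0).random(10_000)
average_regret = online_gradient_descent(z) / len(z)
```

The average regret shrinks like $O(1/\sqrt{T})$, so here it is guaranteed to be at most $3/\sqrt{10^4} = 0.03$.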

Gradient Descent

We consider one of the simplest iterative procedures for solving the (unconstrained) optimization problem $\min_{x \in \mathbb{R}^d} f(x)$.
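A minimal sketch of gradient descent with a fixed step size, assuming $f$ is differentiable with an $L$-Lipschitz gradient and using the common step $1/L$. The quadratic test function below is a hypothetical example chosen because its minimiser is known in closed form.

```python
import numpy as np


def gradient_descent(grad, x0, step, iters=1000):
    """Iterate x_{k+1} = x_k - step * grad(x_k) for a fixed number of steps."""
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        x = x - step * grad(x)
    return x


# Example: f(x) = 0.5 x^T A x - b^T x with A symmetric positive definite,
# so grad f(x) = A x - b and the unique minimiser solves A x = b.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, 1.0])
L = np.linalg.eigvalsh(A).max()  # Lipschitz constant of grad f
x_star = gradient_descent(lambda x: A @ x - b, x0=np.zeros(2), step=1.0 / L)
```

For this strongly convex quadratic, each step contracts the error by a factor of at most $1 - \lambda_{\min}(A)/\lambda_{\max}(A)$, so the iterates converge geometrically to $A^{-1}b$.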

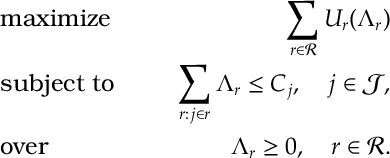

A Network Decomposition

We consider a decomposition of the following network utility optimization problem

SYS: