Q-learning is an algorithm, that contains many of the basic structures required for reinforcement learning and acts as the basis for many more sophisticated algorithms. The Q-learning algorithm can be seen as an (asynchronous) implementation of the Robbins-Monro procedure for finding fixed points. For this reason we will require results from Robbins-Monro when proving convergence.

A key ingredient is the notion of a -factor as described in Section [IDP]. Recall that optimal

-factor,

, is the value of starting in state

taking action

and thereafter following the optimal policy. In Infinite Time Horizon, Prop 2 we showed that this solved the recursion:

![\label{QL:FixedPoint} Q(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta \max_{\hat{a}} Q(\hat{X},\hat{a}) )]\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/3333f304cfcf0b908278f2feec330a54.png?w=840)

Def. [Q-learning] Given a state , an action

, its reward

and the next state

,

-learning performs the update

![Q(x,a) \xleftarrow[]{\alpha} r(x,a) + \beta \max_{a'\in \mathcal A} Q(\hat{x},a') - Q(x,a)](https://appliedprobability.blog/wp-content/uploads/2019/01/f4d330d452b789b8527d8507a8726dd0.png?w=840)

where positive (learning rate) parameter. Recall

means reset

with

such that

.

To implement this as an algorithm, we assume that we have a sequence of state-action-reward-next_state quadruplets and we apply the above update to each of the terms in this sequence.

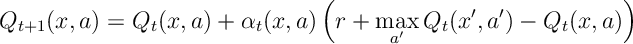

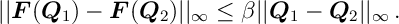

Thrm 1. For a sequence of state-action-reward triples Consider the Q-learning update for

if the sequence of state-action-reward triples visits each state and action infinitely often, and if the learning rate is an adapted sequence satisfying the Robbins-Monro condition

then, with probability ,

where is the optimal value function.

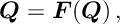

Proof. We essentially show that the result is a consequence of Theorem 3 in Robbins-Monro. We note that the optimal -function,

satisfies a fixed point equation

with

![]()

for each and

. We know from Prop 2 in the post Infinite Time Horizon, that for discounted programming

is a contraction. I.e.

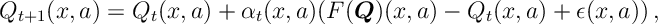

Now notice that the -learning algorithm performs the update

where![\epsilon(x,a) = r + \beta \max_{\hat{a}} Q(\hat{X},\hat{a}) - \mathbb E_{x,a} [ r(x,a) + \beta \max_{\hat{a}} Q(\hat{X},\hat{a}) ]](https://appliedprobability.blog/wp-content/uploads/2019/01/0975a5eff04a55410b76cce4d435d032.png?w=840)

for . The update above is a Robbin’s Monro update. Further Notice

remains the same for all other values of

, the update is asynchronous. It is not hard to see that when we condition on

the set of previous actions and states that

![\mathbb E [\epsilon_t(x_t,a_t) | \mathcal F_t] = 0](https://appliedprobability.blog/wp-content/uploads/2019/01/e69a9b2aebb5df9a6f04a1e7dbb19f97.png?w=840)

and, a quick calculation shows,1 that

![\mathbb E [\epsilon_t(x_t,a_t)^2 | \mathcal F_t] \leq 2 r^2_{\max} + 2 \beta^2 \max_{x,a} Q_t(x,a)^2 \, .](https://appliedprobability.blog/wp-content/uploads/2019/01/b51bcbb4acc88fec8f11ee01e5ee5b96.png?w=840)

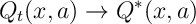

From this we see that we are working in the setting of Theorem 3 in Robbins-Monro and that the conditions of that theorem are satisfied. Thus it must be that

![Q_t(x,a) \xrightarrow[t\rightarrow\infty ]{} Q^*(x,a)](https://appliedprobability.blog/wp-content/uploads/2019/01/a7af11478b5aa8465637867f1c681212.png?w=840)

where satisfies

. In otherwords, as required, it satisfies the Bellman equation for the optimal

-function and thus is optimal.

Literature.

The argument here is depends heavily on the fixed point results. But the main source used is

Tsitsiklis, John N. “Asynchronous stochastic approximation and Q-learning.” Machine learning 16.3 (1994): 185-202.

- Note

↩