Thus far we have considered finite time Markov decision processes. We now want to solve MDPs of the form ![V(x) = \maxi_{\Pi \in {\mathcal P} } \quad R(x,\Pi) := \mathbb{E}_{x_0} \left[ \sum_{t=0}^{\infty} \beta^{t} r(X_t,\pi_t) \right] \, .](https://appliedprobability.blog/wp-content/uploads/2019/01/c0c1e66eb9b0cb64c2860e22d53a5d16.png?w=840)

We can generalize Bellman’s equation to infinite time, a correct guess at the form of the equation would, for instance, be

![V(x) = \max_{a\in {\mathcal A}} \Big\{ r(x,a) + \beta {\mathbb E}_{x,a} \left[ V(\hat{X}) \right] \Big\}, \qquad x\in \mathcal X\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/341f5effe6bdeb3b2f06685f3e317fe6.png?w=840)

Previously we solved Markov Decisions Processes inductively with Bellman’s equation. In infinite time, we can not directly apply induction; however, we see that Bellman’s equation still holds and we can use this to solve our MDP. For now we will focus on the case of discounted programming: here we assume that

We will cover cases where later.

At this point it is useful define the concept of a -factor. A Q-factor of a policy

is the reward that arises when we take action

from state

and then follow policy

.

Def. [Q-Factor] The -factor of reward function

is the value for taking action

in state

![Q_R(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta R(\hat{X}))]\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/a06c6f99e4b4e441c743360b7ba15115.png?w=840)

Similarly the -factor for a policy

, denoted by

, is given by the above expression with

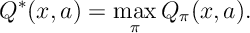

. The Q-factor of the optimal policy is given by

The following result shows that if we have solved the Bellman equation then the solution and its associated policy is optimal.

Thrm. For a discounted program, the optimal policy satisfies

![V(x) = \max_{a\in {\mathcal A}} \Big\{ r(x,a) + \beta {\mathbb E}_{x,a} \left[ V(\hat{X}) \right] \Big\}.](https://appliedprobability.blog/wp-content/uploads/2019/01/59eb3d8eddf1452495e360435d2c24fc.png?w=840)

Moreover, if we find a function such that

![R(x) = \max_{a\in {\mathcal A} } \left\{ r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X}) \right] \right\}](https://appliedprobability.blog/wp-content/uploads/2019/01/5aa0bdd68ed86cd780c71db98f7fe1c7.png?w=840)

then , i.e. the solution to the Bellman equation is unique, and we find a function

such that

![\pi(x) \in \argmax_{a\in \mathcal A} \left\{ r(x,a) + \beta \mathbb E_{x,a} \left[ R(\hat{X}) \right] \right\}](https://appliedprobability.blog/wp-content/uploads/2019/01/d8ed9227d9e769c75108f8e60278db31.png?w=840)

Then is optimal and

the optimal value function.

Proof. We know that Applying limits as

on both sides and bounded convergence theorem gives that

![\begin{aligned} R(x,\Pi) & = r(x,\pi_0) + \beta \mathbb E_{x,\pi_0} \left[ R(\hat{X},\hat{\Pi}) \right]\\ &\leq r(x,\pi_0) + \beta \mathbb E_{x,\pi_0} \left[ V(\hat{X}) \right]\,.\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2019/01/b3b1fb86aaa12a0dfa2a242cf368a97b.png?w=840) For the inequality, above, we maximize

For the inequality, above, we maximize over

. Now maximizing the left hand side over

gives

![V(x) \leq \sup_{\pi_0 \in {\mathcal A}} \left\{ r(x,\pi_0) + \beta \mathbb{E}_{x,\pi_0} \left[ V(\hat{X}) \right] \right\}.](https://appliedprobability.blog/wp-content/uploads/2019/01/9613eff6520747960e79455a7537d4da.png?w=840)

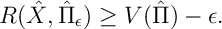

At this point we have that the Bellman equation but with an inequality. We need to prove the inequality in the other direction. For this, we let be the policy that chooses action

and then, from the next state

, follows a policy

which satisfies

We have that

![\begin{aligned} V(x) & \geq R(x,\pi_\epsilon) = r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X},\hat{\Pi}_\epsilon) \right] \\ & \geq r(x,a) + \beta \mathbb{E}_{x,a} \left[ V(\hat{X}) \right] - \epsilon \beta\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2019/01/408cbd8586c6d8a219023f1370b25c9f.png?w=840)

The first inequality holds by the sub-optimality of and the second holds by the assumption on

. Maximizing over

, and taking

gives

![V(x) \geq \max_{a\in {\mathcal A}} \Big\{ r(x,a) + \beta {\mathbb E}_{x,a} \left[ V(\hat{X}) \right] \Big\}.](https://appliedprobability.blog/wp-content/uploads/2019/01/763b8252481ac8c7185b9b4f4a235d41.png?w=840)

Thus we now have that

![V(x) = \max_{a\in {\mathcal A}} \Big\{ r(x,a) + \beta {\mathbb E}_{x,a} \left[ V(\hat{X}) \right] \Big\}.](https://appliedprobability.blog/wp-content/uploads/2019/01/8a566a0dac74aeb3905cf8771edac849.png?w=840)

So at this point we know that the optimal value function satisfies the Bellman equation. For the next part of the result we need to show that the solution to this recursion is unique.

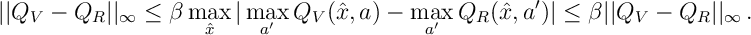

Suppose that is another solution to the Bellman equation. From the definition of a

-factor and the Bellman recursion,

and

. Thus note that

![Q_V(x,a) - Q_R({x,a}) = \beta \mathbb E [ V(\hat{X}) - R(\hat{X}) ] = \beta \mathbb E [ \max_{a'} Q_V(\hat{X},a) - \max_{a'} Q_R(\hat{X},a') ]](https://appliedprobability.blog/wp-content/uploads/2019/01/56fbea25a1c8542bbc470c0cda7bbf13.png?w=840)

Thus

Since the only solution to this inequality is

and thus

So solutions to the Bellman equation are unique for discounted programming. Finally we must show that if we can find a policy that solves the Bellman equation, then it is optimal.

If we find a function and a function

such that

![R(x) = \max_{a\in {\mathcal A} } \left\{ r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X}) \right] \right\}, \quad \pi(x) \in \argmax_{a\in \mathcal A} \left\{ r(x,a) + \beta \mathbb E_{x,a} \left[ R(\hat{X}) \right] \right\}](https://appliedprobability.blog/wp-content/uploads/2019/01/a663f0355881be58c54a2d9eab5e0aec.png?w=840)

then note that the MDP induced by is a Markov chain (with transition matrix

). Both

and

solve the equation

. So by Prop 2 in Markov Chains: A Quick Review,

.

It is worth collating together a similar result for -factors. Given the facts accrued about value function and Bellman’s equation. The following Proposition should not be too great a surprise (and can be skipped on first reading).

Prop. a) Stationary -factors satisfy the recursion

![Q_\pi(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta Q_{\pi}(\hat{X},\pi(\hat{X}) )]\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/5effaf4974566d43cbc948cfd5f91a1a.png?w=840)

b) Bellman’s Equation can be re-expressed in terms of -factors as follows

![Q^*(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta \max_{\hat{a}} Q^*(\hat{X},\hat{a}) )]\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/9eb26e4129e9217042c7ea9fb7aa9b71.png?w=840)

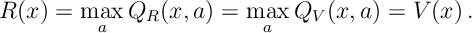

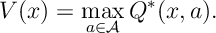

The optimal value function satisfies

c) The operation

![F_{x,a} (\bm Q) = \mathbb E_{x,a} [ r(x,a) + \beta Q_{\pi}(\hat{X},\pi(\hat{X}) )]\,](https://appliedprobability.blog/wp-content/uploads/2019/01/ceb645fd545b1dc4f1e821386ec4c73c.png?w=840)

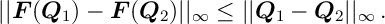

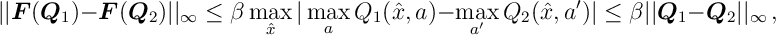

is a contraction with respect to the supremum norm, that is,

Proof. a) We can think of extending the state space of our MDP to include states as well as

. In this new MDP we can assume that initially the MDP starts in state

then moves to the state

according to the transition probabilities

. There after it remains in

moving according to policy

. Thus by Prop 2 in Markov Chains: A Quick Review

![Q_\pi(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta R(\hat{X},\pi )]](https://appliedprobability.blog/wp-content/uploads/2019/01/5e64a48f439aa064eb4abc6fe2bf8c86.png?w=840)

where is the reward function of policy

. Further since

is the value from taking

instead of following policy

to should also be clear that

![Q_\pi(x,\pi) = \mathbb E_{x,\pi(x)} [ r(x,\pi(x)) + \beta R(\hat{X},\pi )] = R(x,\pi )](https://appliedprobability.blog/wp-content/uploads/2019/01/6eccf6f748daa022ef081846de2f0f96.png?w=840)

Thus, as required,

![Q_\pi(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta Q_{\pi}(\hat{X},\pi(\hat{X}) )]\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/5effaf4974566d43cbc948cfd5f91a1a.png?w=840)

b) Further it should be clear that the optimal value function for the extended MDP discussed has a Bellman equation of the form

![\begin{aligned} Q^*(x,a) &= \mathbb E_{x,a} [ r(x,a) + \beta V(\hat{X})] \\ V(x) & = \max_{a\in\mathcal A} \mathbb E_{x,a} [ r(x,a) + \beta V(\hat{X})]\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2019/01/fb38d9307c773ff8a70338c465ab9395.png?w=840)

Comparing the first equation above with the second, it should be clear that and substituting this back into the first equation gives as required

![Q^*(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta \max_{\hat{a}\in \mathcal A} Q^*(\hat{X},\hat{a}) )]\, .](https://appliedprobability.blog/wp-content/uploads/2019/01/b20f94975f009078b100d7c35f33143a.png?w=840)

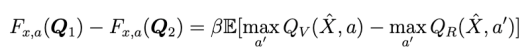

c) The proof of this part is already embedded in the previous Theorem. Note that

Thus

as required.

as required.

One thought on “Infinite Time Horizon”