(This is a section in the notes here.)

We want to calculate probabilities for different events. Recall that events are sets of outcomes, and that there are various ways of combining sets. This section is a bit abstract, but it will become useful for concrete calculations later.

Operations on Events.

Definition [Union] For events $A, B \subseteq \Omega$, the union of $A$ and $B$ is the set of outcomes that are in $A$ or $B$. The union is written

$$
A \cup B .
$$

We can say “$A$ or $B$” as well as “$A$ union $B$”.

Definition [Intersection] For events $A, B \subseteq \Omega$, the intersection of $A$ and $B$ is the set of outcomes that are in both $A$ and $B$. The intersection is written

$$
A \cap B .
$$

We can say “$A$ and $B$” as well as “$A$ intersection $B$”.

Definition [Complement] For an event $A \subseteq \Omega$, the complement of $A$ is the set of outcomes in the sample space $\Omega$ that are not in $A$. We denote the complement of $A$ with¹

$$
A^c .
$$

We can say “not $A$”.

Definition [Relative Complement] For events $A, B \subseteq \Omega$, the relative complement of $B$ relative to $A$ is the set of outcomes not in $B$ that are in $A$. The relative complement of $B$ relative to $A$ is written

$$
A \backslash B .
$$

We can say “$A$ not $B$”.

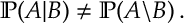
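As a concrete illustration, Python's built-in `set` type supports all four operations directly. The following is a minimal sketch. It assumes a single roll of a die as the sample space, and the events `A` and `B` are arbitrary choices made for illustration.

```python
# A minimal sketch: events as Python sets of outcomes for one roll of a die.
# The sample space and the events A and B are illustrative choices.
omega = {1, 2, 3, 4, 5, 6}  # sample space Ω
A = {2, 4, 6}               # "the roll is even"
B = {4, 5, 6}               # "the roll is at least 4"

union = A | B               # A ∪ B: outcomes in A or B
intersection = A & B        # A ∩ B: outcomes in both A and B
complement_A = omega - A    # A^c: outcomes in Ω that are not in A
relative = A - B            # A \ B: outcomes in A that are not in B

print(sorted(union))         # [2, 4, 5, 6]
print(sorted(intersection))  # [4, 6]
print(sorted(complement_A))  # [1, 3, 5]
print(sorted(relative))      # [2]
```

Note that the complement needs the sample space `omega`, whereas the relative complement `A - B` only needs the two events.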

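Warning! Later we will define $\mathbb P(A | B)$ to denote the probability of $A$ given $B$. The line $|$ in $\mathbb P(A | B)$ is straight, whereas the line $\backslash$ in the relative complement $A \backslash B$ is slanted. So be warned that, in general,

$$
\mathbb P(A | B) \neq \mathbb P(A \backslash B) .
$$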

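Here are a few facts about sets.

Lemma 1. For events $A, B, C \subseteq \Omega$:

i) $A \cup B = B \cup A$ and $A \cup (B \cup C) = (A \cup B) \cup C$.

ii) $A \cap B = B \cap A$ and $A \cap (B \cap C) = (A \cap B) \cap C$.

iii) $A \cap (B \cup C) = (A \cap B) \cup (A \cap C)$ and $A \cup (B \cap C) = (A \cup B) \cap (A \cup C)$.

iv) (De Morgan’s Laws) $(A \cup B)^c = A^c \cap B^c$ and $(A \cap B)^c = A^c \cup B^c$.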

v) If $A \subseteq B$ then $A \cap B = A$.

vi) .

Remarks.

The proof of each statement above is quite straightforward. For each statement, we can draw a Venn diagram (i.e. we draw 1, 2, or 3 intersecting circles and shade the appropriate areas to check the result). For instance, the first statement in Lemma 1iii) can be seen in Figure 1, below.

Figure 1. A Venn diagram verifying that $A \cap (B \cup C) = (A \cap B) \cup (A \cap C)$.

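From i) and ii) we see that it does not matter in what order we apply a sequence of unions, or in what order we apply a sequence of intersections. For that reason, when we apply a sequence of unions or a sequence of intersections, we can unambiguously write

$$
A_1 \cup A_2 \cup \dots \cup A_n \qquad \text{and} \qquad A_1 \cap A_2 \cap \dots \cap A_n .
$$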

The first statement in iii) is analogous to the statement that, for numbers $a, b, c$,

$$
a \times (b + c) = (a \times b) + (a \times c) .
$$

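In De Morgan’s Laws, iv), we can think of the complement of a union as being an intersection, and the complement of an intersection as being a union. I.e. taking complements swaps $\cup$ for $\cap$ and $\cap$ for $\cup$.² Thus, for events $A_1, \dots, A_n$,

$$
(A_1 \cup \dots \cup A_n)^c = A_1^c \cap \dots \cap A_n^c \qquad \text{and} \qquad (A_1 \cap \dots \cap A_n)^c = A_1^c \cup \dots \cup A_n^c .
$$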
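Instead of drawing Venn diagrams, we can also check these set identities by brute force on a small sample space. The sketch below assumes a four-outcome sample space, which is an arbitrary choice. It checks De Morgan’s Laws and the two distributive laws for every choice of events. This is only a finite check, not a proof for general sample spaces.

```python
from itertools import combinations

# Sketch: brute-force check of De Morgan's Laws and the distributive laws
# over every choice of events A, B, C from a small (illustrative) sample space.
omega = frozenset(range(4))

def all_events(space):
    """Return every subset of `space` as a frozenset."""
    items = sorted(space)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

def comp(E):
    """Complement of E relative to omega."""
    return omega - E

events = all_events(omega)  # 2**4 = 16 events
checked = 0
for A in events:
    for B in events:
        # De Morgan's Laws, Lemma 1 iv)
        assert comp(A | B) == comp(A) & comp(B)
        assert comp(A & B) == comp(A) | comp(B)
        for C in events:
            # Distributive laws for sets
            assert A & (B | C) == (A & B) | (A & C)
            assert A | (B & C) == (A | B) & (A | C)
            checked += 1
print(checked)  # 4096 triples (A, B, C)
```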

Probability Rules for Operations on Sets

We can now start to think about what these operations on events imply for the probabilities of those events. Here is a sequence of lemmas to this effect. (You can skip the proofs on first reading.)

Lemma 2. If $A$ and $B$ have no outcome in common, i.e. $A \cap B = \emptyset$, then³

$$
\mathbb P(A \cup B) = \mathbb P(A) + \mathbb P(B) .
$$

Proof.

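Lemma 2 can be extended to a countable collection of events $A_1, A_2, \dots$ with $A_i \cap A_j = \emptyset$ for all $i \neq j$. So

$$
\mathbb P\Big( \bigcup_{i=1}^{\infty} A_i \Big) = \sum_{i=1}^{\infty} \mathbb P(A_i) .
$$

The above equality is actually an axiom of probability.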
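On a discrete sample space, $\mathbb P(E)$ is obtained by summing the probabilities of the outcomes in $E$. In that setting, Lemma 2 can be checked directly. The sketch below assumes a loaded die with made-up outcome probabilities. The names `p` and `prob` are hypothetical and chosen only for illustration.

```python
from fractions import Fraction

# Sketch, assuming a discrete sample space where P(E) is the sum of the
# probabilities of the outcomes in E. The loaded-die weights are made up.
p = {1: Fraction(1, 10), 2: Fraction(1, 10), 3: Fraction(1, 10),
     4: Fraction(1, 10), 5: Fraction(1, 10), 6: Fraction(1, 2)}

def prob(event):
    """P(event) = sum of p(outcome) over the outcomes in the event."""
    return sum((p[w] for w in event), Fraction(0))

A = {1, 2}
B = {6}
assert A & B == set()     # A and B have no outcome in common
print(prob(A | B))        # 7/10
print(prob(A) + prob(B))  # 7/10
```

Exact `Fraction` arithmetic is used so that the two sides compare equal without floating-point rounding.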

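Lemma 3. If $A \subseteq B$ then

$$
\mathbb P(A) \le \mathbb P(B) .
$$

Proof. Using Lemma 1ii) and v), $B$ is the union of the disjoint events $A$ and $B \backslash A$:

$$
B = A \cup (B \backslash A), \qquad A \cap (B \backslash A) = \emptyset .
$$

Using Lemma 2 and the fact that probabilities are non-negative,

$$
\mathbb P(B) = \mathbb P(A) + \mathbb P(B \backslash A) \ge \mathbb P(A) .
$$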

Suppose I have a statement like “I have a red car”. This implies “I have a car”. So the probability of “I have a car” is at least the probability of “I have a red car”. The above shows that this inequality holds for any two statements where one implies the other.
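Lemma 3 can be checked in the same way. The sketch below uses made-up loaded-die probabilities, which are an assumption for illustration. For every pair of nested events $A \subseteq B$, it checks both the decomposition $\mathbb P(B) = \mathbb P(A) + \mathbb P(B \backslash A)$ and the inequality $\mathbb P(A) \le \mathbb P(B)$.

```python
from fractions import Fraction
from itertools import combinations

# Sketch: check Lemma 3 (A ⊆ B implies P(A) <= P(B)) for every pair of
# nested events, using made-up loaded-die probabilities.
p = {1: Fraction(1, 10), 2: Fraction(1, 10), 3: Fraction(1, 10),
     4: Fraction(1, 10), 5: Fraction(1, 10), 6: Fraction(1, 2)}

def prob(event):
    """P(event) = sum of p(outcome) over the outcomes in the event."""
    return sum((p[w] for w in event), Fraction(0))

# All 2**6 = 64 events of the six-outcome sample space.
events = [frozenset(c) for r in range(7) for c in combinations(p, r)]
pairs = 0
for A in events:
    for B in events:
        if A <= B:  # A is a subset of B
            # B splits into the disjoint pieces A and B \ A
            assert prob(B) == prob(A) + prob(B - A)
            assert prob(A) <= prob(B)
            pairs += 1
print(pairs)  # 729 = 3**6 nested pairs
```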

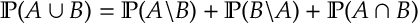

Lemma 4.

$$
\mathbb P(A \cup B) = \mathbb P(A) + \mathbb P(B) - \mathbb P(A \cap B) .
$$

(An intuitive proof of this lemma: draw a Venn diagram with events $A$ and $B$. Notice that if we colour in $A$ and then colour in $B$, then we end up colouring $A \cap B$ twice. So if we want to count that region once, which we do for the event $A \cup B$, we need to subtract $\mathbb P(A \cap B)$ once.)

Proof. Since $A \cup B$ is the union of the disjoint events $A \backslash B$, $B \backslash A$ and $A \cap B$, Lemma 2 gives

$$
\mathbb P(A \cup B) = \mathbb P(A \backslash B) + \mathbb P(B \backslash A) + \mathbb P(A \cap B) , \qquad \text{(1)}
$$

whereas, since $A$ is the disjoint union of $A \backslash B$ and $A \cap B$ (and similarly for $B$),

$$
\begin{aligned}
\mathbb P(A) + \mathbb P(B)
&= [\mathbb P(A \backslash B) + \mathbb P(A \cap B)] + [\mathbb P(B \backslash A) + \mathbb P(A \cap B)] \\
&= \mathbb P(A \backslash B) + \mathbb P(B \backslash A) + 2\, \mathbb P(A \cap B) . \qquad \text{(2)}
\end{aligned}
$$

Subtracting (1) from (2) gives

$$
\mathbb P(A) + \mathbb P(B) - \mathbb P(A \cup B) = \mathbb P(A \cap B) .
$$

Rearranging the above expression gives the result.

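It is often easy to calculate $\mathbb P(A \cap B)$ from $\mathbb P(A)$ and $\mathbb P(B)$ (see the later discussion on independence). The above lemma then gives a way to obtain $\mathbb P(A \cup B)$ from $\mathbb P(A)$, $\mathbb P(B)$ and $\mathbb P(A \cap B)$.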
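Here is a small numerical check of Lemma 4. It assumes two fair dice, with all 36 ordered outcomes equally likely. Take $A$ = “the first die shows 6” and $B$ = “the second die shows 6”. Then $\mathbb P(A \cup B) = \frac16 + \frac16 - \frac1{36} = \frac{11}{36}$. In this example, $\mathbb P(A \cap B)$ also happens to equal $\mathbb P(A)\,\mathbb P(B)$, which previews the discussion of independence.

```python
from fractions import Fraction
from itertools import product

# Sketch: Lemma 4 for two fair dice, where each of the 36 ordered outcomes
# has probability 1/36 (an illustrative assumption).
omega = set(product(range(1, 7), repeat=2))

def prob(event):
    """Equally likely outcomes: P(E) = |E| / |Ω|."""
    return Fraction(len(event), len(omega))

A = {w for w in omega if w[0] == 6}  # first die shows 6
B = {w for w in omega if w[1] == 6}  # second die shows 6

lhs = prob(A | B)
rhs = prob(A) + prob(B) - prob(A & B)
print(lhs, rhs)                          # 11/36 11/36
print(prob(A & B) == prob(A) * prob(B))  # True
```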

Lemma 5.

$$
\mathbb P(A^c) = 1 - \mathbb P(A) .
$$

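Proof. $A \cup A^c = \Omega$, while $A \cap A^c = \emptyset$. Also $\mathbb P(\Omega) = 1$ since probabilities sum to one (see Definition [probdef]). So, by Lemma 2,

$$
1 = \mathbb P(\Omega) = \mathbb P(A \cup A^c) = \mathbb P(A) + \mathbb P(A^c) .
$$

Rearranging gives the required result.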

Sometimes the number of outcomes in $A$ is large, so we may need to sum the probabilities of many different outcomes to get $\mathbb P(A)$. However, it may be that $A^c$ has only a few outcomes, so it may be simple to calculate $\mathbb P(A^c)$. The above lemma then gives us a way to get $\mathbb P(A)$.
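As an example, again assume two fair dice with 36 equally likely ordered outcomes, and let $A$ be the event that the sum is at most 11. $A$ contains 35 outcomes, but $A^c$ contains only $(6, 6)$. So Lemma 5 gives $\mathbb P(A) = 1 - \frac1{36} = \frac{35}{36}$. The sketch below computes this both ways.

```python
from fractions import Fraction
from itertools import product

# Sketch: two fair dice, all 36 ordered outcomes equally likely (assumed).
omega = set(product(range(1, 7), repeat=2))

def prob(event):
    """Equally likely outcomes: P(E) = |E| / |Ω|."""
    return Fraction(len(event), len(omega))

A = {w for w in omega if sum(w) <= 11}  # 35 outcomes
A_c = omega - A                         # only (6, 6)

print(sorted(A_c))    # [(6, 6)]
print(1 - prob(A_c))  # 35/36, via Lemma 5
print(prob(A))        # 35/36, by counting all 35 outcomes
```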