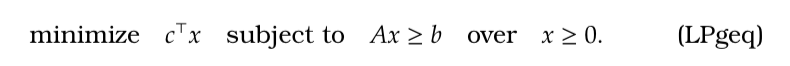

A linear program is a constrained optimization problem with a linear objective, , and linear functional constraints,

, we could for instance write

- Here

is a vector,

is an

matrix with row vectors

and, as before,

and

and

.

- A linear program could have some equality constraints

or could optimize some variables over

over

. Even so, with the addition of extra variables, constraints and suitable choice of

,

and

, the above optimization can express any linear program.

- There are various ways to interpret . To start with, we could interpret

as the running cost of

different machines types. The vector

could represent the required rate of production of

different goods. If a matching

makes good

at rate

, the

component of

, then a constraint

must be met and the optimization asks to minimize the cost of production subject to the production constraints.

- Because our objective and constraints are convex, we can apply the Lagrangian approach.

Lemma 1. The dual of is the optimization

Proof. Including slack variables , we minimize the Lagrangian with Lagrange multipliers

So by maximizing, the dual of is as required:

Lemma 2. If is feasible for and

is feasible for and they obey the complementary slackness conditions

and

then

is optimal for and

is optimal for .

Proof. By Weak Duality, for all primal feasible and dual feasible

,

. But for

and

as above,

. Thus we see the primal and dual are equal and thus these

and

are optimal.

The set is the intersection of

half-spaces. In other words, the feasible set is a convex polytope.

- An extreme point point of a convex polytope

(or indeed any convex set) is a point

such that if

for some

then

.

- For a polytope we think of an extreme point as a point on the corner of the shape.

Theorem 1. Any linear program with a finite optimum has at least one optimum at an extreme point.

So, we just need to check extreme points to find an optimum. Let’s consider the optimization

which, as we know, can be made equivalent to .

which, as we know, can be made equivalent to .

Consider matrix

and let

where

. We let

be the

submatrix of

formed by removing the columns not in

. We call the matrix

a basis.1 Given

and

, we use the notation

,

and

where here elements are understood to be ordered relative to and

. If

, i.e. non-basis elements are zero, and

then we call

a basic solution. We call a positive basic solution a basic feasible solution (bfs).

Henceforth, we make the following assumptions on the matrix and vector

.

Assumption 1. The rows of are linearly independent.

Assumption 2. Every basic solution has exactly

non-zero variables.

Assumption 3. Every submatrix of

is non-singular.

- Assumption 1 removes redundant constraints from optimization .

- Assumption 3 implies that the rows of basis

are a basis of vector space

.

- Assumptions 2 and 3 can be made to hold by an infinitesimally small perturbation of the entries of

.

- Assumptions 2 and 3 imply that each basis

uniquely specifies a bfs

.

The extreme points for the feasible set of can be found.

Theorem 2. The extreme points of are exactly the set of basic feasible solutions.

- Finding a extreme point is now characterized and computable, namely, we find

where

.

Theorem 3. [Simplex Theorem] Given Assumptions 1–3, for , a basic feasible solution is optimal iff

Moreover, if is not optimal, i.e.

for some

, then there exists a new basis

for some

such that the basic feasible solution

satisfies

.

- This theorem gives both a method to verify optimality of a bfs and a mechanism to move to a better bfs from a non-optimal bfs. As we will discuss, this method is called the Simplex Algorithm.

- For a maximization, the inequality in is reversed.

- Since

, optimality condition can be expressed as

- The vector

, defined above, is called the reduced cost vector and its entries are reduced costs.

- We can interpret

as variables of the dual of . So once

, we have a dual feasible solution

and a primal feasible solution

. Thus, by essentially the same argument we used in Lemma [LP:primaldualcomp], both

and

are optimal for the primal and the dual problems respectively.

Proof of the Simplex Theorem. Let’s compare bfs to a feasible solution

. As

is feasible

. Thus, using Assumption 3,

So

So

Thus, if holds then the bfs is optimal. Conversely, by Assumptions 2 and 3, for any

sufficiently small, i.e.

s.t.

for some

, we can choose

so that

is feasible. Namely,

is given by , and it is positive because it is close to

. Hence

This verifies that is necessary and sufficient for optimality.

Now suppose that bfs is not optimal, i.e.

for some

. This suggests we should increase the

-th component. Take

where

is the

th unit vector in

and

. Note

So the larger is the more

improves on

. The biggest

so that

is feasible is

Observe, if no such exists then

is unbounded. Otherwise, take

to be the argument minimizing . Note

is a bfs on

with

.

- When

is known, we can use the term “basis” interchangeably to mean

or

.↩

Thanks! Could you give me a good link that introduces this business of this ‘dual’ concept in optimization? I often see it but I don’t understand how it comes about or what it is.

LikeLike

Hi Naren,

I’ve updated the post on Lagrangian Optimization. If you read everything up to Theorem 2 on that post. You should have everything you need.

LikeLike

Thank you!

LikeLike