We consider one of the simplest iterative procedures for solving the (unconstrainted) optimization

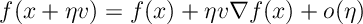

where is a convex function twice differentiable function. For fixed

If , the gradient is most negative when

. Thus, starting from

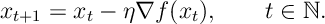

define the gradient descent algorithm:

- We assume

the Hessian matrix of

at

satisfies

, for some

.So, by a Taylor expansion

- We assume

. So the right-hand inequality above guarantees that

for all

.

- We assume there is a unique finite solution to our optimization.1

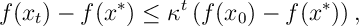

We now see our algorithm converges exponentially fast to its solution, so-called linear convergence.

Given the assumptions above there exists and

such that

Rewriting , we have

Using lower bound on the Hessian, we bound with the following Taylor expansion

C

C

ombining the last two inequalities, we have as required,

![f(x_{t+1}) - f(x^*) \leq \underbrace{(1- 4b\eta (1-B \eta))}_{=\kappa} \Big[ f(x_{t}) - f(x^*) \Big].](https://appliedprobability.blog/wp-content/uploads/2017/07/19e97e15847b9701f6e8f421909e93e1.png?w=840)

Finally for , we reapply our Hessian lower-bound assumption and use the above bound for :

Projected Gradient Descent

We consider a simple iterative procedure for solving a constrained optimization

where is a convex function twice differentiable function and where

is some non-empty closed convex set, eg.

. Like in Section [GD]we want to follow the steepest descent direction

. However, such points need not belong to

. Thus we consider the projection of

on to

:

and then, from , we define the projected gradient descent by

- After projecting it need not be true that

. Thus we adjusted the step-size

and our proof will study the distance between

and the optimal solution

rather than the gap

.

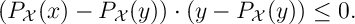

• The key observation is that making a projection cannot increase distances

[PGD:proj]

For all , we must have

, i.e. the plane

separates

from

. If this were not true, then we would have a contradiction, in particular, there would be a point on the line joining

and

that is closer to

. We thus have

Adding together and then applying Cauchy-Schwartz implies

as required.

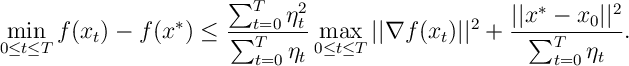

If the gradient of iterates are bounded, then

Hence if ,

and

then

.

Recurring the above expression yields  Rearranging the above gives the required result.

Rearranging the above gives the required result.

- This assumption is not really needed, but it saves space.↩