We consider the Robbins-Monro update

![x_{n+1} = x_n +\alpha_n [g(x_n) + \epsilon_n]](https://appliedprobability.blog/wp-content/uploads/2020/02/bc12abe703df0485b1fb7d4a6cd6a05c.png?w=840)

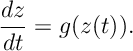

and argue that this can be approximated by the o.d.e.:

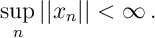

The condition for convergence used was

Put informally, the condition is used to keep the process moving [albeit in decreasingly small increments] while the condition

ensures that the noise from the process goes to zero.

Given that noise is suppressed and increments get small, it is natural to ask how close the above process is to the ordinary differential equation

Moreover, can Lyapunov stability results [that we applied earlier] be directly applied to prove the the convergence of the corresponding stochastic approximation scheme? Often, the answer is yes. And this has certain conceptual advantages, since the Lyapunov conditions described earlier can be quite straightforward to establish and also we don’t need to directly deal with the compounding of small stochastic errors from the sequence .

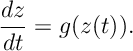

The set up. The idea is to let

Here represents the amount of “time” that the process has been running for. We then let

We let be the solution above o.d.e. started at

at time

, that is

We then compare and

to see how much error has accumulated since time

. More specifically we are interested in

![\sup_{t\in [t_m,t_m+T]\cap \mathcal T} || x(t) - z_m(t) ||\, .](https://appliedprobability.blog/wp-content/uploads/2020/02/f111ba5dfa48d2b2bdb6ceed5702a875.png?w=840)

Assumptions. In addition to the Robbins-Monro condition , we make the following assumptions.

is Lipschitz continuous.

- For

![\mathbb E [ \epsilon_n | F_n ] = 0](https://appliedprobability.blog/wp-content/uploads/2020/02/56d070530a2d5c0c8f310ca52d2eda6b.png?w=840) and

and .

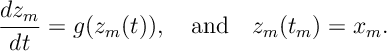

Main result. A key result that we will prove is the following proposition

Proposition 1.

![\lim_{m\rightarrow \infty} \sup_{t\in [t_m,t_m+T]\cap \mathcal T} || x(t) - z_m(t) || = 0\, .](https://appliedprobability.blog/wp-content/uploads/2020/02/8d3601931c653e39625fd0c0558b61a0.png?w=840)

where convergence holds with probability and in

.2

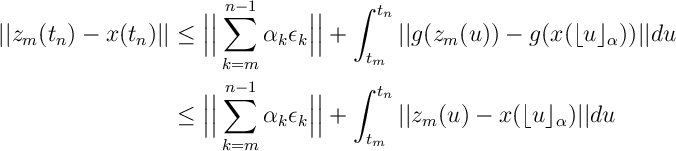

Proof. Notice

while

where . So

which implies by Gronwall’s Lemma

where the final inequality holds for such that

, and we define

. Notice that

,

, is a martingale and further

![\mathbb E M_n^2 = \sum_{k=1}^n \alpha \mathbb E [ \epsilon_k^2] \leq K\sum_{k=1}^\infty \alpha_k^2 <\infty](https://appliedprobability.blog/wp-content/uploads/2020/02/dcc52b7daa5c7b61b5513c43d37e567f.png?w=840)

where . Thus

is an

bounded Martingale and thus convergence with probability

and in

. Consequently

is a cauchy sequence, meaning

with probability

with probability and in

. Applying this to gives the required result

![\lim_{m\rightarrow\infty} \sup_{t\in [t_m,t_m+T]\cap \mathcal T} || z_m(t) - x(t) || = 0\, .](https://appliedprobability.blog/wp-content/uploads/2020/02/542342ec0effea38692cb5930e0984a1.png?w=840)

QED.

Applying the o.d.e limit.

Before we focus on the proof of Proposition 1 it’s worth explaining how it can be applied. The main idea is to

- Check that the o.d.e. convergence by showing gets close to the some desired set of points

in

time units for each initial condition

,

.

- Then apply Proposition 1 to show that the stochastic approximation is also close to the o.d.e at time

.

This is sufficient to show that the stochastic approximation converges to .

Here, we combine La Salle’s Invariance Principle, Theorem 2, with Proposition 1, though, in principle, we could have considered any of the ode results from Lyapunov Functions. We assume that the assumptions of Proposition 1 are satisfied. In addition we assume,

- Almost surely,

Further we recall that for La Salle’s Invariance Principle, we assumed there was a Lyapunov function such that

is continuously differentiable .

for all

.

- The sets

are compact for all

.

Also we defined . Recall that La Salle’s Invariance Principle stated that for all solutions to the o.d.e.

with

there exists a

such that

for all

.

Theorem 1. For the stochastic approximation scheme

![x_{n+1} = x_n + \alpha_n [g(x_n) + \epsilon_n ]](https://appliedprobability.blog/wp-content/uploads/2020/02/6749a0d76c00186ec81b0e68dfcab9a7.png?w=840)

described in Proposition 1 and given a Lyapunov function as described above, it holds, with probability

,

![x_n \xrightarrow[n\rightarrow \infty]{} \mathcal X^\star](https://appliedprobability.blog/wp-content/uploads/2020/02/bd4664fa401523ac509b4798f53ea4a2.png?w=840)

Proof. First we check that the o.d.e solutions considered in Proposition 1 are going to satisfy the conditions of La Salle. In particular, La Salle’s result requires o.d.e. solutions to all belong to some compact set. Notice, since we assume that

we can let

and take

. Since

is decreasing for all solutions to the o.d.e. We see that

for all

and

.

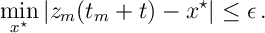

Next we set up the bounds from the two results. From La Salle’s Invariance Principle, we know that

such that

and

Taking this choice of , we know from Proposition 1 that there exists

s.t.

![\sup_{t \in [t_m , t_m + 2T]\cap \mathcal T} || x(t) - z_m(t) || \leq \epsilon.](https://appliedprobability.blog/wp-content/uploads/2020/02/64d7b13f3bcc20614b24f19ba5284b8d.png?w=840)

Notice we can take suitably large so that

for all

. Notice this implies that

![\bigcup_{m : m \geq m^\star } [ t_m +T, t_m +2T] = [t_{m^\star} + T,\infty)\, .](https://appliedprobability.blog/wp-content/uploads/2020/02/75ed0bda62659f424fff89a4ed747d3f.png?w=840)

Now notice that if is such that

for some

then combining the inequalities above, gives that

Thus we see, with the union above, that for all it holds that

. In other words

as required. QED.

References

The o.d.e approach to stochastic approximation was initiated by Ljung. Shortly after it is was extensively developed by Kushner, see below for two text book accounts. The arguments above loosely follow the excellent text of Borkar. We shorten the proof in several ways and consider convergence. A further text with a general treatment is Benveniste et al.

.Benveniste, M. Metivier, and P. Priouret. Adaptive algorithms and stochastic approxima- tions, volume 22. Springer Science & Business Media, 2012.

V. S. Borkar. Stochastic approximation: a dynamical systems viewpoint, volume 48. Springer, 2009.

H. Kushner and G. G. Yin. Stochastic approximation and recursive algorithms and applications, volume 35. Springer Science & Business Media, 2003.

H. J. Kushner and D. S. Clark. Stochastic approximation methods for constrained and un- constrained systems, volume 26. Springer Science & Business Media, 1978.

Ljung. Analysis of recursive stochastic algorithms. IEEE Transactions on Automatic Control, 22(4):551-575, 1977.