- High level idea: Policy Improvement and Policy Evaluation.

- Value Iteration; Policy Iteration.

- Temporal Differences; Q-factors.

For infinite time MDPs, we cannot apply to induction on Bellman’s equation from some initial state – like we could for finite time MDP. So we need some algorithms to solve MDPs.

At a high level, for a Markov Decision Processes (where the transitions are known), an algorithm solving a Markov Decision Process involves two steps:

- (Policy Improvement) Here you take your initial policy

and find a new improved new policy

, for instance by solving Bellman’s equation:

![\pi(x) \in \argmax_{a\in \mathcal A} \left\{ r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X},\pi_0) \right] \right\}](https://appliedprobability.blog/wp-content/uploads/2018/02/b498c90d70da814b5e8114fea4f09f37.png?w=840)

- (Policy Evaluation) Here you find the value of your policy. For instance by finding the reward function for policy

:

![R(x,\pi) = \mathbb E^\pi_{x} \left[ \sum_{t=0}^\infty \beta r(X_t,\pi(X_t))\right]](https://appliedprobability.blog/wp-content/uploads/2018/02/f7270d2c72c480595dd2bb86a83192a4.png?w=840)

Value iteration

Value iteration provides an important practical scheme for approximating the solution of an infinite time horizon Markov decision process.

Def. [Value iteration] Take

and recursively calculate

![\begin{aligned} \pi_{s+1}(x) \in & \argmax_{a\in {\mathcal A} } \left\{ r(x,a) + \beta \mathbb{E}_{x,a} \left[ V_s(\hat{X}) \right] \right\} \\ V_{s+1}(x) & = \max_{a\in {\mathcal A} } \left\{ r(x,a) + \beta \mathbb{E}_{x,a} \left[ V_s(\hat{X}) \right] \right\}\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2018/02/cb6aeccf69eee99c3b72edba44cc8614.png?w=840)

for this is called value iteration.

We can think of the two display equations above, respectively, as the policy improvement and policy evaluation steps. Notice, that we don’t really need to do the policy improvement step to do each iteration. Notice the policy evaluation step evalutes one action under the new policy afterwards the value is

.

Similarly we can define value iteration for minimization problem:

![L_{s+1}(x) = \min_{a\in {\mathcal A} } \left\{ l(x,a) + \beta \mathbb{E}_{x,a} \left[ L_s(\hat{X}) \right] \right\}.](https://appliedprobability.blog/wp-content/uploads/2018/02/3d620db9ed972ddce3e09a77d45372f3.png?w=840)

Each iteration of value iteration improves the solution:

Ex 1. For reward function define

![\mathcal L R(x) = \max_{a\in {\mathcal A} } \left\{ r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X}) \right] \right\}.](https://appliedprobability.blog/wp-content/uploads/2018/02/a3e1fb623f3a80aaa02c31922e54a94f.png?w=840)

Show that if for all

then

for all

.

Ans 1.

![r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X}) \right] \geq r(x,a) + \beta \mathbb{E}_{x,a} \left[ \tilde{R}(\hat{X}) \right].](https://appliedprobability.blog/wp-content/uploads/2018/02/99582ce5a214079285c7b972a949a0ef.png?w=840)

Now maximize both sides over .

Ex 2. [Continued] Show that for value iteration with positive programming

Ans 2. Now repeatedly apply [1].

Ex 3. [Continued][IDP:Cont_2] Show that for value iteration with negative programming

Ans 3. Identical idea as [1-2].

So we know Value Iteration improves the value function on each step. We now need to argue the value iteration gets to the optimal solution.

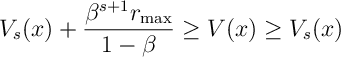

Ex 4. Show that for discounted programming

Ans 4. Note that is the optimal solution to the finite time MDP with

steps, recall in Def [MDP:Def]. Thus,

Now let .

Ex 5. Show that for positive programming

Ans 5. Take any policy . Then

Now take limits . Now maximize over

.

Ex 6. Show that for negative programming with a finite number of actions

Ans 6. Same idea as [5].

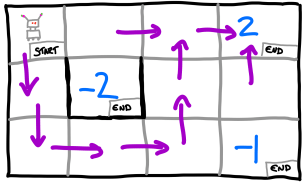

Ex 7. [GridWorld] A robot is placed on the following grid.

The robot can chose the action to move left, right, up or down provided it does not hit a wall, in this case it stays in the same position. (Walls are colored black.) With probability 0.8, the robot does not follow its chosen action and instead makes a random action. The rewards for the different end states are colored above. Write a program that uses, Value Iteration to find the optimal policy for the robot.

Ans 7. Notice that the robot does not just take the shortest root. (I.e. some forward planning is required)

Policy Iteration

We consider a discounted program with rewards and discount factor

.

Def 2. [Policy Iteration] Given the stationary policy , we may define a new (improved) stationary policy,

, by choosing for each

the action

that solves the following maximization

![{\mathcal I}\Pi (x) \in \argmax_{a\in{\mathcal A} } \; r(x,a) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X},\Pi) \right]](https://appliedprobability.blog/wp-content/uploads/2018/02/f62894b144333bf596b79660d82fccf9.png?w=840)

where is the value function for policy

. We then calculate

. Recall that for each

this solves the equations

![R(x,\mathcal I \Pi) = r(x,\mathcal I \Pi (x)) + \beta \mathbb{E}_{x,a} \left[ R(\hat{X},\mathcal I \Pi) \right]](https://appliedprobability.blog/wp-content/uploads/2018/02/3989a96503a5e70fdbb5236cf232520d.png?w=840)

Policy iteration is the algorithm that takes

Starting from some of the tree stationary policy .

Thrm 1. Under Policy Iteration

and, for bounded programming,

Ex 8 [Proof of Thrm 1] Under the policy iteration

(Hint: requires Ex 8 & Ex 10 from Markov Chains)

Ans 8. By Ex 8 from Markov Chains, and the optimality of with respect to

we have

![\begin{aligned} R(x,\Pi) = r(x,\pi(x)) + \beta \mathbb E_{x,\pi(x)} \left[ R(\hat{X},\Pi) \right] \leq r(x,\mathcal I \Pi (x)) + \beta \mathbb E_{x,\mathcal I \pi(x)} \left[ R(\hat{X},\Pi) \right]\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2018/02/481ce83141f0956ced2927eff942117a.png?w=840)

Applying Ex 10 from Markov Chains gives the result.

Ex 9. [Continued]

![r(x,a) + \beta \mathbb E_{x,a} \left[ R(\hat{X},\Pi) \right] \geq R(x,\mathcal I \Pi)](https://appliedprobability.blog/wp-content/uploads/2018/02/877c8f31a75bfa71ae0af1d0faa15aa5.png?w=840)

Ans 9.

![\begin{aligned} r(x,a) + \beta \mathbb E_{x,a} \left[ R(\hat{X},\Pi) \right] & \leq r(x,\mathcal I \pi(x)) + \beta \mathbb E_{x,\mathcal I(x)} \left[ R(\hat{X},\Pi) \right] \\ & \leq r(x, \mathcal I \pi(x)) + \beta \mathbb E_{x,\mathcal I \Pi} \left[ R(\hat{X},\Pi) \right] = R(x,\mathcal I \Pi) \end{aligned}](https://appliedprobability.blog/wp-content/uploads/2018/02/42b50fccf11ab6411b9a495e4a400624.png?w=840)

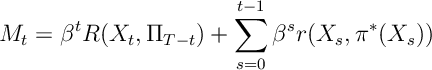

We now proceed via a Martingale argument. Define

where is the optimal policy.1

Ex 10. [Continued] Show that is a supermartingale with respect to

.

Ans 10. Taking expectations with respect to the optimal policy

![\begin{aligned} &\mathbb E^* \left[ M_{t+1} - M_t | \mathcal F_t \right] \\ & = \beta \mathbb E^* \left[ \beta R(X_{t+1},\Pi_{T-t-1}) + r(X_t, \pi^*(X)t) - R(X_t,\Pi_{T-t}) \Big| \mathcal F_t \right] \\ & = \beta^t \mathbb E^* \left[T \beta \mathbb E^*_{X_t,\pi^*(X_t)} \left[ \beta R(\hat{X},\Pi_{T-t-1}) + r(X_t,\pi^*(X_t)) - R(X_t,\Pi_{T-t}) \right] \Big| \mathcal F_t \right] \\ & \geq 0\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2018/02/22bc92b9fa8af21660e4d831073d1a40.png?w=840)

The inequality at the end follows by [9].

Ex 11. [Continued]

Ans 11. Since is a supermartingale:

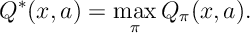

![\begin{aligned} R(x,\Pi_T) = \mathbb E^*_x \left[ M_0 \right] \geq \mathbb E^*_x \left[ M_T \right] = \underbrace{ \mathbb E^*_x \left[ \beta^T R(X_T,\Pi_0) \right] }_{ \xrightarrow[T\rightarrow \infty]{} 0 } + \underbrace{ R_T(x,\Pi^*) }_{ \xrightarrow[T\rightarrow \infty]{} V(x) }\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2018/02/b59443fbdc775c33838667d86cf1f5f7.png?w=840) Therefore, as required,

Therefore, as required,

Ex 12. [GridWorld, again] Write a program that uses, Policy iteration to find the optimal policy for the robot in [7].

Ans 12.

Temporal Difference Iteration

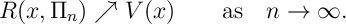

We now discuss how temporal differences, defined below, relate to value iteration and policy iteration. From define a parameterized set of policies that have value iteration and policy improvement as special cases.

Def 3 [Temporal Differences] For a MDP, reward function , and an action

, the temporal differences are

The following exercises show that the Bellman equation and the definition of a stationary policy can be phrased in terms of temporal differences.

Ex 13. [Bellman Equation with Temporal Differences] Show that if is not optimal value function then

![\max_{a\in \mathcal A}\left\{ \mathbb E_{x,a} \left[ d_a(x,\hat{X}) \right] \right\} \geq 0.](https://appliedprobability.blog/wp-content/uploads/2018/02/a8affd977342aedd1770a6a6e2f5ddf2.png?w=840)

Ex 14. [Continued] Show that if is optimal value function then

![\max_{a\in \mathcal A}\left\{ \mathbb E_{x,a} \left[ d_a(x,\hat{X}) \right] \right\} = 0.](https://appliedprobability.blog/wp-content/uploads/2018/02/470375a774c6703774f9bc3fc9ec6782.png?w=840)

Ex 15. [Stationary rewards] Show that the reward function for a stationary policy satisfies,

![\mathbb E_{x,\pi} \left[ d_{\pi(x)}(X_0,{X}_1) \right] =0,\qquad x\in \mathcal X.](https://appliedprobability.blog/wp-content/uploads/2018/02/fd7c2446ef2b4da4bf064ee5927e483e.png?w=840)

We now consider how temporal differences are used to update our value function in value iteration and policy iteration.

Ex 16. [Policy improvement] Show that policy improvement for value iteration and policy iteration are given by

![\pi(x) = \argmax_{a\in \mathcal A} \mathbb E_{x,a} \left[ d_a(x,\hat{X})\right]](https://appliedprobability.blog/wp-content/uploads/2018/02/2e31258c090d047e6fdbce79b0f3b063.png?w=840)

Ex 17. [Continued, Value iteration] Show that policy evaluation under value iteration, updates the value function to

according to

![V(x) = V_0(x) + \mathbb E_{x,\pi(x)} \left[ d_a(x,\hat{X})\right]](https://appliedprobability.blog/wp-content/uploads/2018/02/ff635e278b2683d1f1070c20b41b7126.png?w=840)

where is as given in the policy improvement step.

(i.e. the temporal difference gives the change in the value function.)

Ex 18. [Continued, Policy Iteration] Show that policy evaluation under policy improvement, update that value function to

according to

![V(x) = V_0(x) + \mathbb E_{x,\pi} \left[ \sum_{t=0}^\infty \beta^t d(X_t,X_{t+1}) \right]](https://appliedprobability.blog/wp-content/uploads/2018/02/a117ecfd4f69161f66811f93f74c048b.png?w=840)

Lambda-Policy Improvement

Notice that both value iteration and policy improvement have exactly the same policy improvement step:

![{\pi} (x) \in \argmax \Big\{ r(x,a) + \beta \mathbb E_{x,a} \left[ V_0(x)\right]\Big\}](https://appliedprobability.blog/wp-content/uploads/2018/02/6ace1f7ab376074fb00368c9fe719ba2.png?w=840)

where is the value attributed to the previous policy. However, they differ in how the evaluate the policy. In particular, value iteration considers one step under the new policy:

![{V}(x) := \mathbb E^{{\pi}}_{x} \left[ r(X_0,{\pi}(X_0)) + \beta V_0({X}_1) \right],](https://appliedprobability.blog/wp-content/uploads/2018/02/6295299d86408833d3eb87a5e002b659.png?w=840)

where as policy iteration takes an infinite number of steps:

![{V}(x) := \mathbb E^{\pi} \left[ r(X_0,{\pi}(X_0)) + \beta r(X_1,{\pi}(X_1)) + \beta^2 r(X_2,{\pi}(X_2)) +\dots \right].](https://appliedprobability.blog/wp-content/uploads/2018/02/e1231daedfdfe58d7ad81103b250767b.png?w=840)

The Temporal Difference method takes a geometrically distributed2 number of steps:

![V(x) := \mathbb E^{\pi} \left[ \sum_{t=0}^{\tau_\lambda} \beta^t r(X_t,\pi(X_t)) + \beta^{\tau_\lambda + 1} V_0(X_{\tau_\lambda + 1}) \right].](https://appliedprobability.blog/wp-content/uploads/2018/02/484aa6a94d8818e338c3bfa48ee72660.png?w=840)

where is a geometrically distributed random variable on

with probability of success

).

Ex 19. [Value function from switching policies] Let be value function of a stationary policy

and let

be value function of a stationary policy

.

Let be the policy that follows

for a geometrically distributed time with success probability

and there after follows policy

. Show that the value function of

satisfies

![V^{(\lambda)} (x) = \mathbb E^{\pi} \left[ \sum_{t=0}^{\tau_\lambda-1} \beta^t r(X^{(1)}_t,\pi^{(1)}(X^{(1)}_t)) + \beta^{\tau_\lambda } V_0\big(X^{(1)}_{\tau_\lambda }\big) \right].](https://appliedprobability.blog/wp-content/uploads/2018/02/b9a75daa88a3f8a089306fced2583878.png?w=840)

Ans 19. Should be obvious from discussion above.

Ex 20. [Continued, Bellman Equation] Argue that also satisfies the recursion

![V^{(\lambda)} (x) = r(x,\pi^{(1)}(x)) + \beta \mathbb E_{x,\pi^{(1)}(x)} \left[ \lambda V^{(\lambda)}(\hat{X}) + (1-\lambda) V^{(0)} (\hat{X}) \right]](https://appliedprobability.blog/wp-content/uploads/2018/02/c650d2420e3ff57222e9ec3b37577928.png?w=840)

Ans 20. [ADP:TD_1] This is recursion for the value function of this Markov chain, see [8] from Markov Chains. At each jump, with probability ,

continues and, with probability

,

stops and takes value

.

Ex 21. [Continued, relation with Temporal Differences] Show that ![V^{(\lambda)} ( x) = V^{(0)} (x) + \sum_{t=0}^\infty \beta^t \lambda^t \mathbb E\left[ d_{\pi^{(0)}} ( X_t, X_{t+1} ) \right]](https://appliedprobability.blog/wp-content/uploads/2018/02/ee5a917f55b06bd7be50fd55ae25a7e1.png?w=840)

Ans 21. We use the shorthand &

. From [19],

![\begin{aligned} V^{(\lambda)}(x) & = \mathbb E \left[ \sum_{t=0}^\infty \mathbb I [ \tau_\lambda > t ] \beta^t r_t + \sum_{t=0}^\infty \mathbb I [\tau_\lambda = t ] \beta^t V^{(0)}_t \right] \\ & = \sum_{t=0}^\infty \lambda^t \beta^t\mathbb E [r_t] + \sum_{t=1}^\infty (1-\lambda)\lambda^{t-1} \beta^t V^{(0) }_t \\ & = \sum_{t=0}^\infty \lambda^t \beta^t\mathbb E [r_t] + \beta \sum_{t=0}^\infty \lambda^t \beta^t V^{(0)}_{t+1} - \sum_{t=1}^\infty \lambda^t \beta^t V^{(0)} \\ & = V^{(0)}(x) + \sum_{t=0}^\infty \lambda^t \beta^t \mathbb E \left[ d(X_t,X_{t+1})\right].\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2018/02/cb56adfb089e863f367df57c6d80e044.png?w=840)

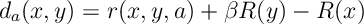

Q-factors

Def 4. [Q-Factor] The -factor of a stationary policy

is the from taking action

and then following policy

there after, that is

![Q_\pi(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta R(\hat{X},\pi))]](https://appliedprobability.blog/wp-content/uploads/2018/02/42de0d4a55491c94f9d5eda83a20cb63.png?w=840)

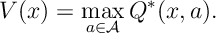

The Q-factor (of the optimal policy) is given by

Ex 22. Show that stationary -factors satisfy the recursion

![Q_\pi(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta Q_{\pi}(\hat{X},\pi(\hat{X}) )]](https://appliedprobability.blog/wp-content/uploads/2018/02/c03fc7a590d63248d1ac00466db2bdef.png?w=840)

Ex 23. Show that Bellman’s Equation can be re-expressed in terms of -factors as follows

![Q^*(x,a) = \mathbb E_{x,a} [ r(x,a) + \beta \max_{\hat{a}} Q^*(\hat{X},\hat{a}) )]](https://appliedprobability.blog/wp-content/uploads/2018/02/b6aa0d81abbed1cf25b4cf54839d05e7.png?w=840)

Ex 24. Show that the optimal value function satisfies

- Note we are implicity assuming an optimal stationary policy exists. We can remove this assumption by considering a

-optimal (non-stationary) policy. However, the proof is a little cleaner under our assumption.↩

- By keeping things geometrically distributed we preserve the Markov property. This would not hold for other distributions↩