Entropy and Relative Entropy occur sufficiently often in these notes to justify a (somewhat) self-contained section. We cover the discrete case, which is the most intuitive.

Entropy – Discrete Case

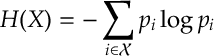

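Def 1. [Entropy] Suppose that $latex X$ is a random variable with values in the countable set $latex {\mathcal X}$ and distribution $latex {\mathbb P}=(p_i:i\in {\mathcal X})$. Then

$latex H(X) := -\sum_{i\in {\mathcal X}} p_i \log p_i$

is the entropy of $latex X$.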
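As a quick illustration of the definition, here is a minimal Python sketch. It assumes a finite distribution passed as a list of probabilities, natural logarithms, and the convention that $latex 0\log 0 = 0$; the function name `entropy` and the two example distributions are ours.

```python
import math

def entropy(p):
    """Entropy -sum_i p_i log p_i (natural log), treating 0 log 0 as 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

# Illustrative distributions (assumptions, not taken from the notes).
uniform = [0.25, 0.25, 0.25, 0.25]
point_mass = [1.0, 0.0, 0.0, 0.0]

print(entropy(uniform))     # equals log 4 up to rounding
print(entropy(point_mass))  # zero: no uncertainty
```

On a finite set the uniform distribution has the largest entropy and a point mass has entropy zero.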

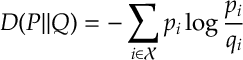

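Def 2. [Relative Entropy] For probability distributions $latex {\mathbb P}=(p_i:i\in {\mathcal X})$ and $latex {\mathbb Q}=(q_i:i\in {\mathcal X})$,

$latex D({\mathbb P}||{\mathbb Q}) := \sum_{i\in {\mathcal X}} p_i \log \frac{p_i}{q_i}$

is the Relative Entropy of $latex {\mathbb P}$ with respect to $latex {\mathbb Q}$.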
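A small Python sketch of relative entropy, assuming finite distributions given as equal-length lists and natural logarithms. The name `relative_entropy` and the example vectors are ours; returning infinity when the first distribution puts mass where the second has none is a common convention that we assume here.

```python
import math

def relative_entropy(p, q):
    """sum_i p_i log(p_i / q_i); inf if p has mass where q has none."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue          # 0 log 0 is treated as 0
        if qi == 0:
            return math.inf   # assumed convention
        total += pi * math.log(pi / qi)
    return total

# Illustrative distributions (assumptions).
p = [0.5, 0.5]
q = [0.9, 0.1]

d_pq = relative_entropy(p, q)
d_qp = relative_entropy(q, p)
d_pp = relative_entropy(p, p)
print(d_pq, d_qp, d_pp)
```

The relative entropy is non-negative, equals zero when the two distributions coincide, and is not symmetric in its arguments, so `d_pq` and `d_qp` differ.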

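Ex 1. For a vector $latex (n_i:i\in {\mathcal X})$ with $latex n=\sum_in_i$ , if $latex n_i/n \rightarrow p_i$ for each $latex i$ as $latex n\rightarrow\infty$, then

$latex \frac{1}{n}\log \frac{n!}{\prod_i n_i!} \rightarrow -\sum_i p_i \log p_i .$

Ans 1. Take logs and apply Stirling’s approximation that $latex \log n! = n\log n - n + o(n)$. So,

$latex \frac{1}{n}\log \frac{n!}{\prod_i n_i!} = \log n - \sum_i \frac{n_i}{n}\log n_i + o(1) = -\sum_i \frac{n_i}{n}\log \frac{n_i}{n} + o(1) \rightarrow -\sum_i p_i \log p_i .$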
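The limit in [1] can be checked numerically. The sketch below assumes the standard reading of the exercise, namely that $latex \frac{1}{n}$ times the log of the multinomial coefficient $latex n!/\prod_i n_i!$ approaches the entropy $latex -\sum_i p_i\log p_i$ when $latex n_i/n \rightarrow p_i$. The distribution `p`, the sample sizes and the helper names are ours; `math.lgamma(m + 1)` is used for $latex \log m!$.

```python
import math

def log_multinomial(counts):
    """log( n! / prod_i n_i! ) with n = sum(counts), via lgamma."""
    n = sum(counts)
    return math.lgamma(n + 1) - sum(math.lgamma(c + 1) for c in counts)

def entropy(p):
    """Entropy -sum_i p_i log p_i (natural log)."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

p = [0.5, 0.3, 0.2]  # illustrative distribution (assumption)

gaps = []
for n in (10, 1000, 100000):
    counts = [round(n * pi) for pi in p]  # counts with n_i / n = p_i
    gaps.append(entropy(p) - log_multinomial(counts) / n)

print(gaps)  # entropy minus (1/n) log multinomial, for growing n
```

The gap is positive and shrinks as $latex n$ grows, which is the $latex o(1)$ term left over from Stirling’s approximation.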

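Ex 2. Suppose that $latex X_1,...,X_n$ are IIDRVs with distribution $latex {\mathbb Q}=(q_i:i\in {\mathcal X})$. Let $latex \hat{P}^n$ be the empirical distribution of $latex X_1,...,X_n$, that is

![\hat{P}^{n}_{i} = \frac{1}{n} \sum_{k=1}^{n} {\mathbb I}[X_k=i]](https://appliedprobability.blog/wp-content/uploads/2017/07/30c54dd1db82350762ba989feb1acb6c.png?w=840)

If $latex (n_i:i\in {\mathcal X})$ are counts with $latex \sum_i n_i = n$ and $latex n_i/n \rightarrow p_i$ for each $latex i\in {\mathcal X}$ then

$latex \frac{1}{n}\log \Pr\Big( \hat{P}^n_i = \frac{n_i}{n},\ \forall i \Big) \rightarrow -\sum_i p_i\log\frac{p_i}{q_i} .$

Ans 2. Note that $latex \Pr\big( \hat{P}^n_i = n_i/n,\ \forall i \big) = \frac{n!}{\prod_i n_i!}\prod_i q_i^{n_i}$, so combining with [1] gives

$latex \frac{1}{n}\log \Pr\Big( \hat{P}^n_i = \frac{n_i}{n},\ \forall i \Big) = \frac{1}{n}\log \frac{n!}{\prod_i n_i!} + \sum_i \frac{n_i}{n}\log q_i \rightarrow -\sum_i p_i\log p_i + \sum_i p_i \log q_i = -\sum_i p_i\log\frac{p_i}{q_i} .$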
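A numerical sketch of [2], under the assumption that the statement is the usual one: the probability that IID samples from $latex {\mathbb Q}$ produce counts $latex n_i \approx np_i$ is a multinomial probability, and $latex \frac{1}{n}$ times its logarithm approaches minus the relative entropy of $latex {\mathbb P}$ with respect to $latex {\mathbb Q}$. The distributions `p` and `q` and all function names are ours.

```python
import math

def log_prob_counts(counts, q):
    """log of n!/prod_i n_i! * prod_i q_i^{n_i}, the multinomial probability."""
    n = sum(counts)
    log_coeff = math.lgamma(n + 1) - sum(math.lgamma(c + 1) for c in counts)
    log_weights = sum(c * math.log(qi) for c, qi in zip(counts, q) if c > 0)
    return log_coeff + log_weights

def relative_entropy(p, q):
    """sum_i p_i log(p_i / q_i), assuming q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.3, 0.2]  # target empirical distribution (assumption)
q = [0.2, 0.3, 0.5]  # sampling distribution (assumption)

rates = []
for n in (10, 1000, 100000):
    counts = [round(n * pi) for pi in p]
    rates.append(log_prob_counts(counts, q) / n)

target = -relative_entropy(p, q)
print(rates, target)
```

The values in `rates` increase towards `target` as $latex n$ grows, so seeing an empirical distribution close to $latex {\mathbb P}$ becomes exponentially unlikely at a rate given by the relative entropy.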

Boltzmann’s distribution

We now use entropy to derive Boltzmann’s distribution.

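Consider a large number of particles. Each particle can take energy levels $latex e_i$, $latex i\in {\mathcal X}$. Let $latex \bar{e}$ be the average energy of the particles. If we let $latex p_i$ be the proportion of particles of energy level $latex e_i$ then these constraints are

$latex \sum_{i} p_i e_i = \bar{e}, \qquad \sum_{i} p_i = 1, \qquad p_i \geq 0 .$

As is often considered in physics, we assume that the equilibrium state of the particles is the state with the largest number of ways of occurring subject to the constraints on the system. The number of ways of arranging $latex n$ particles with proportions $latex (p_i:i\in {\mathcal X})$ is a multinomial coefficient, whose logarithm is approximately $latex n$ times the entropy of $latex (p_i:i\in {\mathcal X})$ [1]. In other words, we solve the optimization

$latex \text{maximize} \quad -\sum_{i} p_i\log p_i \quad \text{subject to} \quad \sum_{i} p_i e_i = \bar{e}, \quad \sum_{i} p_i = 1 \quad \text{over} \quad p_i \geq 0 .$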

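Ex 3. [Boltzmann’s distribution] Show that the solution to this optimization is given by the distribution

$latex p^*_i = \frac{e^{-\beta e_i}}{Z(\beta)}, \qquad i\in {\mathcal X} .$

This is called Boltzmann’s distribution. The scaling constant $latex Z(\beta) = \sum_{i} e^{-\beta e_i}$ is called the Partition function and the constant $latex \beta$ is chosen so that

$latex \sum_{i} e_i \frac{e^{-\beta e_i}}{Z(\beta)} = \bar{e} .$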

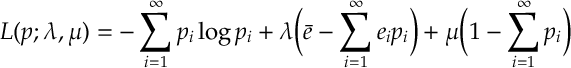
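To make the role of $latex \beta$ concrete, the following Python sketch computes a Boltzmann distribution for a given average energy. It assumes finitely many energy levels and finds $latex \beta$ by bisection, using the fact that the mean energy under $latex p_i \propto e^{-\beta e_i}$ is decreasing in $latex \beta$. The energy levels, the target average, the search interval and all function names are illustrative assumptions, not part of the notes.

```python
import math

def boltzmann(energies, beta):
    """p_i = exp(-beta * e_i) / Z(beta), with Z(beta) = sum_i exp(-beta * e_i)."""
    weights = [math.exp(-beta * e) for e in energies]
    z = sum(weights)  # partition function
    return [w / z for w in weights]

def mean_energy(energies, beta):
    """Average energy sum_i p_i e_i under the Boltzmann distribution."""
    return sum(p * e for p, e in zip(boltzmann(energies, beta), energies))

def solve_beta(energies, target, lo=-50.0, hi=50.0, iters=200):
    """Bisection on beta; relies on mean_energy being decreasing in beta."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mean_energy(energies, mid) > target:
            lo = mid  # mean too high, so beta must be larger
        else:
            hi = mid
    return 0.5 * (lo + hi)

energies = [0.0, 1.0, 2.0, 3.0]  # hypothetical energy levels
target = 1.0                     # hypothetical average energy

beta = solve_beta(energies, target)
p = boltzmann(energies, beta)
print(beta, p, mean_energy(energies, beta))
```

Because the target average here is below the unweighted mean of the levels, the fitted $latex \beta$ is positive and lower energy levels receive more mass. The target must lie strictly between the smallest and largest energy level for a finite $latex \beta$ to exist.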

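Ans 3. The Lagrangian of this optimization problem is

$latex L(p;\beta,\lambda) = -\sum_{i} p_i\log p_i + \beta\Big(\bar{e} - \sum_{i} p_i e_i\Big) + \lambda\Big(1-\sum_{i} p_i\Big) .$

So, finding a stationary point

$latex \frac{\partial L}{\partial p_i} = -\log p_i - 1 - \beta e_i - \lambda = 0$

which implies

$latex p_i = e^{-1-\lambda} e^{-\beta e_i} .$

For our constraints to be satisfied, we require that

$latex e^{-1-\lambda}\sum_{i} e^{-\beta e_i} = 1 \qquad \text{and} \qquad e^{-1-\lambda}\sum_{i} e_i e^{-\beta e_i} = \bar{e} .$

Thinking of $latex e^{1+\lambda}$ as a function of $latex \beta$, we call

$latex Z(\beta) := e^{1+\lambda} = \sum_{i} e^{-\beta e_i}$

the partition function and notice it is easily shown that

$latex -\frac{d}{d\beta}\log Z(\beta) = \sum_{i} e_i \frac{e^{-\beta e_i}}{Z(\beta)} = \bar{e} .$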

The distribution we derived