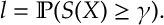

In the Cross Entropy Method, we wish to estimate the likelihood

Here is a random variable whose distribution is known and belongs to a parametrized family of densities

. Further

is often a solution to an optimization problem.

It is assumed that is a relatively rare event, say of order

. One way to estimate

is to take

IID samples

and then average

![\hat{l} = \frac{1}{N} \sum_{i=1}^N {\mathbb I} [S(X_i) \geq \gamma ].](https://appliedprobability.blog/wp-content/uploads/2017/07/e0019c014f5c0b4a802da7159ae18ea3.png?w=840)

This gives us an unbiased estimate. However, because the event is rare, we may require a large number of samples in order to provide a good estimate of

. Importance sampling is a straightforward method that can mitigate these effects. Here sample each

from a different density

and perform the estimate

![\label{CEM:Importance} \hat{l}_g = \frac{1}{N} \sum_{i=1}^N {\mathbb I} [S(X_i) \geq \gamma ] W(x)](https://appliedprobability.blog/wp-content/uploads/2017/07/34a95ce7e9152ff76d7dac3b7289ab41.png?w=840)

where W(x) = f(x)/g(x). Such a change can improve the estimation of . For instance, the choice

gives the correct estimate of

with certainty. Clearly

cannot work in practice; it requires knowledge of

, the parameter which we are estimating. Nonetheless a good estimate of

may be possible. The point of the cross entropy method is to find a reasonable choice for

so that importance sampling can be applied.

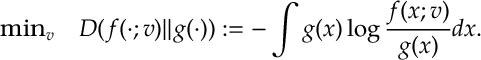

In particular, the cross-entropy method advocates estimating by

according to a parameter choice that minimizes the relative entropy between

and

. Namely,

With a little rearrangement, we see that this is equivalent to the optimization

![\max_v \quad \int {\mathbb I} [S(x) \geq \gamma ] f(x;u) \log f(x; v) dx.](https://appliedprobability.blog/wp-content/uploads/2017/07/25629823ba6ccf99851eb7313397dd3f.png?w=840)

With importance sampling in mind, we apply a change of measure to the above integral to get

![\max_v \quad \int {\mathbb I} [S(x) \geq \gamma ] f(x;w) W(x;u,v) \log f(x; v) dx](https://appliedprobability.blog/wp-content/uploads/2017/07/67b8f9e4fff359e0f461bd700a739bc4.png?w=840)

where . The above optimization may not be explicitly solvable so we replace it with the (importance sampled) optimization

![\label{CEM:Max} \max_v \frac{1}{N} \sum_{i=1}^N {\mathbb I} [S(X_i) \geq \gamma ] W(X_i ; u,w) \log f(x;v) dx](https://appliedprobability.blog/wp-content/uploads/2017/07/b2a657c4dea10ab217cf8423c6544091.png?w=840)

where are IID samples from density

.

In many examples the above optimization in concave and solvable either by descent methods or by differentiating and solving for the condition

![\sum_{i=1}^N {\mathbb I} [S(X_i) \geq \gamma ] W(X_i ; u,w) \nabla \log f(x;v) = 0.](https://appliedprobability.blog/wp-content/uploads/2017/07/44510593f5c2308d7db469a50e9a1c6a.png?w=840)

The reason for incorporating importance sampling into the estimate is because the event could be too unlikely to form an accurate estimate under the choice

. The idea behind the cross entropy method is to apply a multi-level approach where we sequentially increase

and

as follows

- We estimate the

-th percentile of

by taking by ordering the samples

and calculating the empirical

-th percentile, which gives the update

The event is chosen to be reasonably likely, e.g.

. Note that since, in principle, the parameter

is such that less likely

under

when compared with the previous choice of

.

- Given this

, we find

as the best estimate according to our cross-entropy rule . This, in principle, should increase the likelihood of the event

when compared with prior choice of

.

- When

passes the required target level

then the have found a parameter choice that gives reasonable importance to the event

and we can calculate

with importance sampling rule .