We want to optimize the expected value of some random function. This is the problem we solved with Stochastic Gradient Descent. However, we assume that we no longer have access to unbiased estimate of the gradient. We only can obtain estimates of the function itself. In this case we can apply the Kiefer-Wolfowitz procedure.

The idea here is to replace the random gradient estimate used in stochastic gradient descent with a finite difference. If the increments used for these finite differences are sufficiently small, then over time convergence can be achieved. The approximation error for the finite difference has some impact on the rate of convergence.

The Problem Setting

Suppose that we have some function

![F(\theta) = \mathbb E_U [ f (\theta ; U) ],](https://appliedprobability.blog/wp-content/uploads/2022/10/6742d97417befa54c83304070db73641.png?w=840)

that we wish to minimize over . Here

is some random variable.

We suppose that we do not have direct access to the distribution of nor

. But we can sample

and thus we can sample

for different values of

.

We can think of as representing the random reward from a simulator configured under a set of parameters

. We want to maximize the expect reward from the simulator but can only use the information of rewards from different simulation runs.

The Kiefer-Wolfowitz Algorithm

If the problem is reasonably smooth then we can use the Keifer-Wolfowitz proceedure to optimize . Here we use a finite difference approximation of the gradient of

.

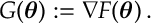

A finite difference approximation. We want to approximate the gradient of , which we denote by

. That is

We define for

where is the

th unit vector. We can then define the finite difference approximation to the gradient

by

It is not hard to show that for a well-behaved function we have that

(Also note that higher-order bounds can also be used here for an improved rate of convergence.)

The Algorithm

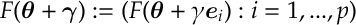

The Kiefer-Wolfowitz Algorithm performs the update

![\label{KW:Alg}

\bm\theta_{t+1} = \bm\theta_t - \bm\alpha_t

\left[

\frac{f(\bm\theta_t+\bm\gamma_t,U_t)-f(\bm\theta_t-\bm\gamma_t,U_t)}{2\gamma_t}

\right]](https://appliedprobability.blog/wp-content/uploads/2022/10/d37b33d7277f8e2e3f2c7d9d356ee24f.png?w=840)

I.e. we replace the finite difference estimate of with the analogous random finite difference in

.

We need to choose and

so that we converge to the optimum.

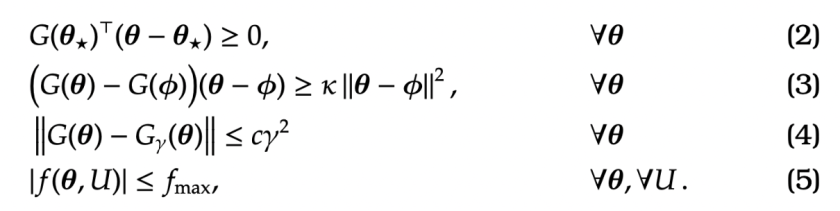

Assumptions

We make the following assumptions on . There exists

and there exists a constant

and

such that

Notice that if

Notice that if is strongly convex with its optimum at

then the two statements above follow.

We also place assumptions on the sequences and

specifically we assume that

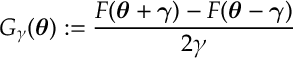

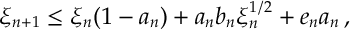

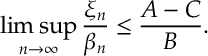

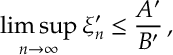

Main Result

THEOREM. For the Kiefer-Wolfowitz procedure , if (2-7) hold then

![\label{KW:thrm:eq1}

\limsup_{t\rightarrow\infty}

\frac{\mathbb E \left[\left\|

\bm\theta_t -\bm\theta_\star

\right\|^2

\right]}{\gamma_t^4 + \alpha_t / \gamma_t^2}

<\infty](https://appliedprobability.blog/wp-content/uploads/2022/10/180e0793495e7eb46d3d5780f93a3862.png?w=840)

Specifically if we take and

then

![\begin{aligned}

\label{KW:thrm:eq2}

\limsup_{t\rightarrow\infty} \frac{\mathbb E \left[\left\|

\bm\theta_t -\bm\theta_\star

\right\|^2\right] }{t^{2/3}} \leq \frac{1}{2} \left[

\frac{c^2}{\kappa^2} +\frac{f_{\max}^2}{4}

\right]

%\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2022/10/5031c705dc5326f505cb16b36223d60c.png?w=840) The proof of Theorem relies on the following proposition.

The proof of Theorem relies on the following proposition.

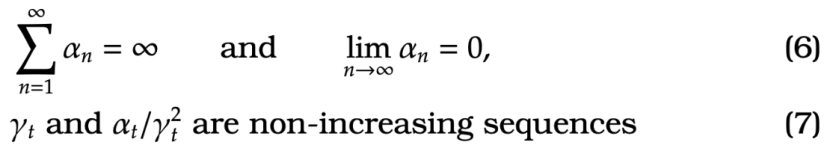

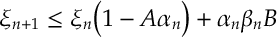

PROPOSITION. Given is a positive sequence,

and

are positive non-increasing sequences and

are such that

if

if

then

We prove this Proposition at the end of this section. Notice that we proved similar bounds to the above for Robbins-Monro.

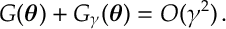

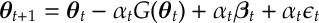

Proof of Theorem. We can rewrite the Kiefer-Wolfowitz recursion as

where ![\bm\beta_t = G(\bm\theta_t ) - G_{\gamma_t}(\bm\theta_t)

\quad

\text{and}

\quad

\epsilon_t

=

G_{\gamma_t} (\bm\theta_t)

-

\left[

\frac{

f(\bm\theta_t + \gamma_t , U_t)

-

f(\bm\theta_t - \gamma_t , U_t)}{2 \gamma_t}

\right] \,.](https://appliedprobability.blog/wp-content/uploads/2022/10/45d8e41e098036966b23a697e167a565.png?w=840) Setting

Setting

![z_t = \mathbb E \left[

\left\|

\bm\theta_t - \bm\theta_\star

\right\|^2

\right]\, ,](https://appliedprobability.blog/wp-content/uploads/2022/10/73a470be61f3d51f1b808011438e17e3.png?w=840)

notice that ![\begin{aligned}

z_{t+1}

=\, &

\mathbb E

\left[

\left\|

\bm\theta_{t+1} - \bm\theta_t + \bm\theta_t + \bm \theta_\star

\right\|^2

\right]

\notag

\\

\leq \,

&

\mathbb E

\left[

\left\|

\bm\theta_t - \bm\theta_\star

\right\|^2

\right]

+

2 \mathbb E \left[

\Big( \bm\theta_{t+1} - \bm\theta_t \Big)^\top \Big( \bm\theta_t - \bm\theta_\star \Big)

\right]

+

\mathbb E \left[

\left\|

\bm\theta_{t+1} - \bm\theta_t

\right\|^2

\right]

\notag

\\

=\,

&

\;\; z_t \notag

\\&+

2\mathbb E \left[

-\alpha_t G(\bm\theta_t)^\top (\bm\theta_t -\bm\theta_\star)

\right] \label{KW:e1}

\\&

+

2 \mathbb E \left[

\alpha_t \bm\beta_t (\bm\theta_t -\bm\theta_\star)

\right]

\label{KW:e2}

\\&

+

2 \mathbb E \left[

\alpha_t \epsilon_t ( \bm\theta_t - \bm\theta_\star)

\right]\label{KW:e3}

\\&+ \mathbb E \left[

\left\|

\bm\theta_{t+1} - \bm\theta_t

\right\|^2

\right]\label{KW:e4}\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2022/10/da0769f408048ea2f711cc1f2b2a7e6f.png?w=840)

We now bound the four terms above.

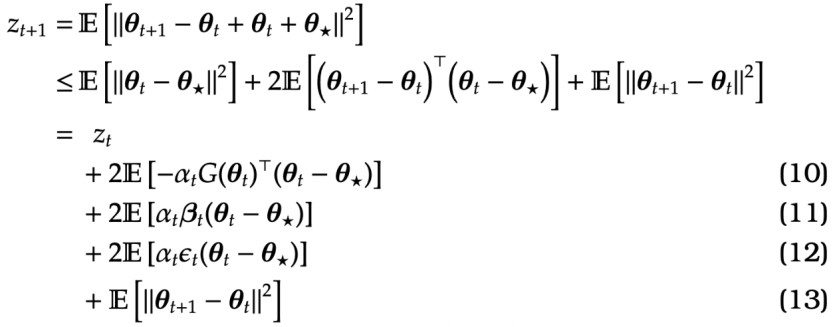

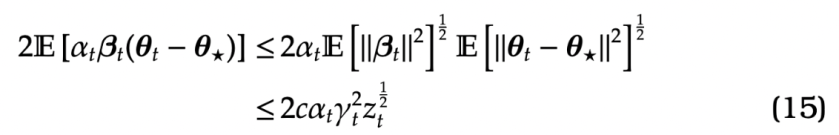

We can bound (10) as follows

![\begin{aligned}

&-2\alpha_t \mathbb E \left[

G(\bm\theta_t)^\top (\bm\theta_t-\bm\theta_\star)

\right]

\notag

\\

\leq \,

&

-2\alpha_t \mathbb E \left[

(G(\bm\theta_t) - G(\bm\theta_\star) )

(\bm\theta_t - \bm\theta_\star)

\right]

\tag{By Assumption \eqref{KW:As1}}

\\

\leq \,

&

-2\alpha_t \kappa \mathbb E\left[

\left\|

\bm\theta_t -\bm\theta_\star

\right\|

\right]

\tag{By Assumption \eqref{KW:As2}}

\\

=\,

&

-2 \kappa \alpha_t z_t \label{KW:eq1}\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2022/10/a8819ff9ca83b260bd40e05c37a24864.png?w=840)

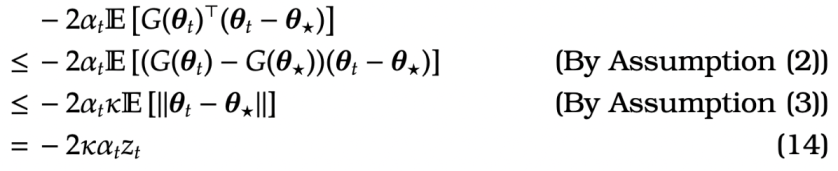

For the term (11), by Cauchey-Schwartz

In the final inequality above we note that from Assumption (4) that:

For term (12), we have ![\begin{aligned}

2\mathbb E [ \alpha_t \bm\epsilon_t^\top (\bm\theta_t -\bm\theta_\star )] = 0

%\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2022/10/efb2c6c87cef6f4d26c8fa986aa6cb8f.png?w=840)

because .

For term (13)

Applying (14), (15), (16) and (17) to (10), (11) , (12) and (13) gives

By the proposition above with ,

and

we see that

From this we see that the required result holds.

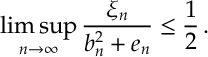

For and

, we have

![\limsup_{t\rightarrow\infty} \frac{z_t}{t^{2/3}} \leq \frac{1}{2} \left[

\frac{c^2}{\kappa^2} +\frac{f_{\max}^2}{4}

\right]](https://appliedprobability.blog/wp-content/uploads/2022/10/e12a9a4e773c0362180bba3cf9c737e9.png?w=840)

which gives the final required expression. QED.

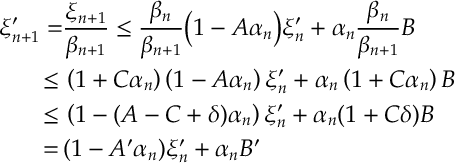

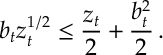

We now prove Proposition. We do so by proving Lemmas 1 and 2.

LEMMA 1. If is a positive sequence such that

and

then

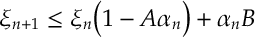

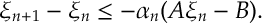

PROOF. Rearranging gives

If for some

then

![\xi_{n+1} -\xi_n \leq -\alpha_n ( A \xi_n -B)

\leq -\alpha_n ( A [B/A + \epsilon] -B)

= -\alpha_n A \epsilon\, .](https://appliedprobability.blog/wp-content/uploads/2022/10/2261c4e13c8a645292d071d245a2d21a.png?w=840)

So is decreasing when

holds and, since

, there exists

s.t.

. Let

be the first value of

where

occurs.

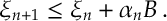

Notice, can only increase when

, and since

is a positive then

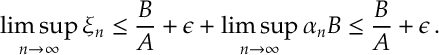

Thus, we see that

Therefore

Since is arbitrary the results holds. QED.

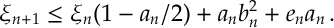

LEMMA 2. If is a positive sequence such that

and

with then

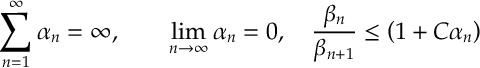

PROOF. Since , take

such that

for all

.

Now defining for

gives

where we define and

. Applying Lemma 1 gives

which recalling the definitions of ,

,

and recalling that

is arbitrary gives the result. QED.

Proof of Proposition. By the inequality

we have

Now the results follow by applying Lemma 2. With ,

,

and

. (Notice we can take

because

and

are decreasing.) QED.

References

The Kiefer-Wolfowitz procedure was first proposed by Kiefer and Wolfowitz (1952). Finite time bound similar to those in the Theorem above are given by Broadie et al. (2011). The analysis here largely follows from the arguments in Fabian (1967). Fabian and Broadie et al. both use results from Chung (1954), which is the basis for Lemmas 1 and 2 above.

Kiefer, J., & Wolfowitz, J. (1952). Stochastic estimation of the maximum of a regression function. The Annals of Mathematical Statistics, 462-466.

Broadie, M., Cicek, D., & Zeevi, A. (2011). General bounds and finite-time improvement for the Kiefer-Wolfowitz stochastic approximation algorithm. Operations Research, 59(5), 1211-1224.

Fabian, V. (1967). Stochastic approximation of minima with improved asymptotic speed. The Annals of Mathematical Statistics, 191-200.

Chung, K. L. (1954). On a stochastic approximation method. The Annals of Mathematical Statistics, 463-483.