Let’s explain why the normal distribution is so important.

(This is a section in the notes here.)

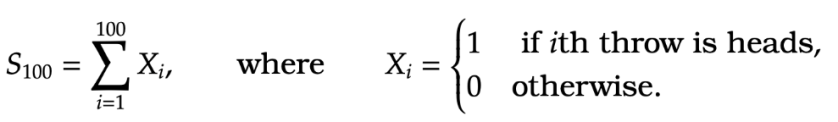

Suppose that I throw a coin $100$ times and count the number of heads, $S_{100} = X_1 + \dots + X_{100}$, where $X_i = 1$ if the $i$-th throw is a head and $X_i = 0$ otherwise.

The proportion of heads should be close to its mean,

$$\frac{S_{100}}{100} \approx \frac{1}{2} = \mathbb E[X] \, ,$$

and for a larger number of throws it should be even closer. This can be shown mathematically (not just for coin throws but for quite general random variables).

Theorem [Weak Law of Large Numbers] For independent random variables ,

, with mean

and variance bounded above by

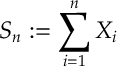

, if we define

then for all

![\begin{aligned}

\mathbb P\bigg( \mu - \epsilon \leq \frac{S_n}{n} \leq \mu + \epsilon \bigg)

\xrightarrow[n\rightarrow \infty ]{} 1 \, .\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2021/11/c547fcffd9804bd685fce5f38d7d4a33.png?w=840)
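To get a feel for the statement, here is a minimal simulation sketch for the coin example. The function name `proportion_within`, the tolerance `eps = 0.05`, the number of trials and the seed are all illustrative choices, not part of the notes. It estimates the probability that the proportion of heads lies within `eps` of $1/2$ for a few values of $n$.

```python
import random

def proportion_within(n, eps, trials=2000, p=0.5, seed=0):
    """Estimate P(|S_n/n - p| <= eps) for n coin throws by simulation."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # S_n: number of heads in n independent throws
        s_n = sum(1 for _ in range(n) if rng.random() < p)
        if abs(s_n / n - p) <= eps:
            hits += 1
    return hits / trials

if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, proportion_within(n, eps=0.05))
```

The estimated probability should move towards $1$ as $n$ grows, which is what the theorem predicts for any fixed tolerance.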

We will prove this result a little later. But, continuing the discussion, suppose $X_1, \dots, X_n$ are independent identically distributed random variables with mean $\mu$ and variance $\sigma^2$. We see from the above result that $S_n / n$ is getting close to $\mu$. Nonetheless, in general, there is going to be some error. So let’s define

$$\epsilon_n := \frac{S_n}{n} - \mu \, .$$

So what does $\epsilon_n$ look like? We know that, in some sense, $\epsilon_n \rightarrow 0$ as $n \rightarrow \infty$, but how fast?

For this we can analyze the variance of the random variable :

Thus the standard deviation of decreases as

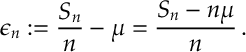

. Given this we can define

Notice that and

So has mean zero and its variance is fixed. I.e. the error as measured by

is not vanishing, but is staying roughly constant. So it seems like there is sometime happening for this random variable

, a question is what happens to

. The answer is that

converges to a normal distribution.

This is a famous and fundamental result in probability and statistics called the central limit theorem.
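The claim that the rescaled error $Z_n = (S_n - n\mu)/(\sigma \sqrt{n})$ keeps mean zero and variance one for every $n$ can be checked numerically. The sketch below assumes Uniform(0,1) summands (so $\mu = 1/2$ and $\sigma^2 = 1/12$). That choice, the helper names `sample_z_n` and `z_n_moments`, and the trial count are only for illustration.

```python
import math
import random
import statistics

def sample_z_n(n, rng, mu=0.5, sigma=math.sqrt(1 / 12)):
    """One draw of Z_n = (S_n - n*mu) / (sigma*sqrt(n)) for Uniform(0,1) summands."""
    s_n = sum(rng.random() for _ in range(n))
    return (s_n - n * mu) / (sigma * math.sqrt(n))

def z_n_moments(n, trials=5000, seed=1):
    """Empirical mean and variance of Z_n over many independent draws."""
    rng = random.Random(seed)
    draws = [sample_z_n(n, rng) for _ in range(trials)]
    return statistics.fmean(draws), statistics.pvariance(draws)

if __name__ == "__main__":
    for n in (1, 10, 100):
        mean, var = z_n_moments(n)
        print(n, round(mean, 3), round(var, 3))
```

Up to simulation noise, the mean stays near $0$ and the variance near $1$ whatever the value of $n$, in contrast to $S_n/n - \mu$, whose variance shrinks like $1/n$.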

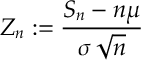

Theorem [Central Limit Theorem] For independent random variables with mean

and variance

, for

and

then

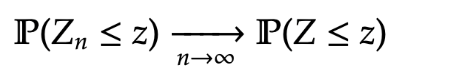

then ![\begin{aligned}

\mathbb P (Z_n \leq z ) \xrightarrow[n \rightarrow \infty ]{} \mathbb P ( Z \leq z)

%\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2021/11/e681ef5920e13417fc863fa019aa1f18.png?w=840)

where is a standard normal random variable.
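As a rough numerical check of this convergence, the following sketch compares an empirical estimate of $\mathbb P(Z_n \leq z)$ with the standard normal distribution function. It assumes Exponential(1) summands (mean $1$, variance $1$), chosen here only because they are clearly not normal. The function names, trial count and seed are hypothetical.

```python
import math
import random

def std_normal_cdf(z):
    """P(Z <= z) for a standard normal Z, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def empirical_cdf_z_n(n, z, trials=5000, seed=2):
    """Estimate P(Z_n <= z) for Exponential(1) summands (mu = sigma = 1)."""
    rng = random.Random(seed)
    count = 0
    for _ in range(trials):
        s_n = sum(rng.expovariate(1.0) for _ in range(n))
        z_n = (s_n - n) / math.sqrt(n)  # (S_n - n*mu) / (sigma*sqrt(n))
        if z_n <= z:
            count += 1
    return count / trials

if __name__ == "__main__":
    for z in (-1.0, 0.0, 1.0):
        print(z, empirical_cdf_z_n(100, z), round(std_normal_cdf(z), 4))
```

The two columns should agree to within simulation error, and the agreement should improve as $n$ increases.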

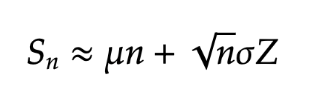

Given the discussion above, the Central Limit Theorem roughly says that

$$S_n \approx n \mu + \sigma \sqrt{n} \, Z$$

where $Z$ is a standard normal random variable. So whenever we measure errors about some expected value we should start to consider normal random variables.
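As a worked illustration, return to $100$ throws of a fair coin. Each throw has mean $\mu = 1/2$ and variance $\sigma^2 = 1/4$, so $\sigma = 1/2$. The threshold $55$ below is an arbitrary choice for the example, and no continuity correction is applied.

$$\begin{aligned}
S_{100} &\approx 100 \cdot \tfrac{1}{2} + \tfrac{1}{2} \sqrt{100} \, Z = 50 + 5 Z \, , \\
\mathbb P ( S_{100} \leq 55 ) &\approx \mathbb P ( 50 + 5 Z \leq 55 ) = \mathbb P ( Z \leq 1 ) \approx 0.84 \, .
\end{aligned}$$

So, under the normal approximation, seeing at most $55$ heads in $100$ throws has probability of roughly $0.84$.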