Kalman filtering (and filtering in general) considers the following setting: we have a sequence of states $\bm x_t$, which evolves under random perturbations over time. Unfortunately, we cannot observe $\bm x_t$ directly; we can only observe some noisy function of $\bm x_t$, namely $\bm y_t$. Our task is to find the best estimate of $\bm x_t$ given our observations of $\bm y_t$.

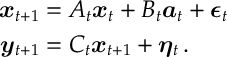

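Consider the equations

$$\bm x_{t+1} = A_t \bm x_t + B_t \bm a_t + \bm\epsilon_t, \qquad \bm y_{t+1} = C_t \bm x_{t+1} + \bm\eta_t,$$

where $\bm\epsilon_t \sim \mathcal N(\bm 0, \Sigma^\epsilon_t)$, $\bm\eta_t \sim \mathcal N(\bm 0, \Sigma^\eta_t)$, and $\bm\epsilon_t$ and $\bm\eta_t$ are independent. Here $\bm a_t$ is a known control (action) applied at time $t$. (We let $\Sigma^{x}$ be the sub-matrix of the covariance matrix corresponding to $\bm x$, and so forth.)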

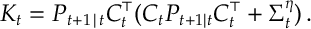
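To make the setting concrete, here is a minimal simulation sketch of a linear Gaussian model of the form $\bm x_{t+1} = A \bm x_t + B \bm a_t + \bm\epsilon_t$, $\bm y_{t+1} = C \bm x_{t+1} + \bm\eta_t$. It assumes time-invariant matrices for brevity, and the function name `simulate`, the position/velocity example and all numerical values are illustrative choices, not taken from the text.

```python
import numpy as np

def simulate(A, B, C, Sigma_eps, Sigma_eta, x0, actions, seed=0):
    """Simulate x_{t+1} = A x_t + B a_t + eps_t and y_{t+1} = C x_{t+1} + eta_t.

    Returns the states x_0..x_T (hidden in practice) and observations y_1..y_T.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    xs, ys = [x], []
    for a in actions:
        eps = rng.multivariate_normal(np.zeros(A.shape[0]), Sigma_eps)
        eta = rng.multivariate_normal(np.zeros(C.shape[0]), Sigma_eta)
        x = A @ x + B @ a + eps          # state transition
        ys.append(C @ x + eta)           # noisy observation of the new state
        xs.append(x)
    return np.array(xs), np.array(ys)

# Illustrative position/velocity model (an assumption): only position is observed.
A = np.array([[1.0, 1.0], [0.0, 1.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])
Sigma_eps = 0.01 * np.eye(2)
Sigma_eta = np.array([[0.25]])
actions = [np.array([0.1])] * 5

xs, ys = simulate(A, B, C, Sigma_eps, Sigma_eta, x0=[0.0, 0.0], actions=actions)
print(xs.shape, ys.shape)  # (6, 2) (5, 1)
```

The filtering problem is then to recover something close to `xs` when only `ys` and `actions` are available.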

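The Kalman filter has two update stages: a prediction update and a measurement update. These are

$$\hat{\bm x}_{t+1|t} = A_t \hat{\bm x}_{t|t} + B_t \bm a_t, \qquad P_{t+1|t} = A_t P_{t|t} A_t^\top + \Sigma^\epsilon_t$$

and

$$\hat{\bm x}_{t+1|t+1} = \hat{\bm x}_{t+1|t} + K_t \big( \bm y_{t+1} - C_t \hat{\bm x}_{t+1|t} \big)$$

and

$$P_{t+1|t+1} = P_{t+1|t} - K_t C_t P_{t+1|t},$$

where

$$K_t = P_{t+1|t} C_t^\top \big[ C_t P_{t+1|t} C_t^\top + \Sigma^\eta_t \big]^{-1}.$$

The matrix $K_t$ is often referred to as the Kalman gain. Assuming the initial state $\bm x_0$ is known and deterministic, we take $\hat{\bm x}_{0|0} = \bm x_0$ and $P_{0|0} = 0$ in the above.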

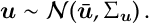
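The two stages translate almost line by line into code. The following is a minimal sketch, assuming the standard form of the updates (predicted mean $A \hat{\bm x} + B \bm a$, predicted covariance $A P A^\top + \Sigma^\epsilon$, gain $K = P C^\top [C P C^\top + \Sigma^\eta]^{-1}$); the function names `predict` and `update` are hypothetical, and the scalar numbers in the usage lines are purely illustrative.

```python
import numpy as np

def predict(x_hat, P, A, B, a, Sigma_eps):
    """Prediction update: propagate the estimate through the dynamics."""
    x_pred = A @ x_hat + B @ a
    P_pred = A @ P @ A.T + Sigma_eps
    return x_pred, P_pred

def update(x_pred, P_pred, C, y, Sigma_eta):
    """Measurement update: correct the prediction using the new observation y."""
    S = C @ P_pred @ C.T + Sigma_eta          # covariance of the predicted observation
    K = P_pred @ C.T @ np.linalg.inv(S)       # Kalman gain
    x_new = x_pred + K @ (y - C @ x_pred)     # shift the mean by the weighted innovation
    P_new = P_pred - K @ C @ P_pred           # observing y reduces the uncertainty
    return x_new, P_new, K

# Scalar usage example with all model matrices equal to 1 (illustrative values).
I = np.eye(1)
x_hat, P = np.array([0.0]), np.zeros((1, 1))  # x_0 known, so P_{0|0} = 0
x_pred, P_pred = predict(x_hat, P, A=I, B=I, a=np.array([0.0]), Sigma_eps=I)
x_hat, P, K = update(x_pred, P_pred, C=I, y=np.array([2.0]), Sigma_eta=I)
print(x_hat, P, K)  # [1.] [[0.5]] [[0.5]]
```

With equal state and observation noise the gain is one half, so the estimate lands midway between the prediction (0) and the observation (2). The explicit inverse mirrors the formula; in numerical practice a linear solve is usually preferred.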

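We will use the following proposition, which is a standard result on normally distributed random vectors, variances and covariances.

Prop 1. Let $\bm x$ be a normally distributed vector with mean $\bm\mu$ and covariance $\Sigma$, i.e. $\bm x \sim \mathcal N(\bm\mu, \Sigma)$.

i) For any matrix $A$ and (constant) vector $\bm b$, we have that

$$A \bm x + \bm b \sim \mathcal N\big( A\bm\mu + \bm b,\; A \Sigma A^\top \big).$$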

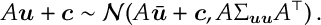

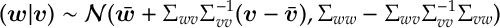

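ii) If we take $\bm x = (\bm x_1, \bm x_2)$, with $\bm\mu = (\bm\mu_1, \bm\mu_2)$ and $\Sigma_{ij}$ the block of $\Sigma$ giving the covariance between $\bm x_i$ and $\bm x_j$, then $\bm x_1$ conditional on $\bm x_2$ gives

$$(\bm x_1 | \bm x_2) \sim \mathcal N\big( \bm\mu_1 + \Sigma_{12} \Sigma_{22}^{-1} (\bm x_2 - \bm\mu_2),\; \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21} \big).$$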

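iii) For any matrix $A$ and any random vector $\bm\eta$ that is independent of $\bm x$ and has covariance $\Sigma^\eta$, $\mathrm{Cov}(\bm x, A\bm x + \bm\eta) = \Sigma A^\top$ and $\mathrm{Cov}(A\bm x + \bm\eta) = A \Sigma A^\top + \Sigma^\eta$.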
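The conditioning rule for a jointly normal vector $(\bm x_1, \bm x_2)$, namely mean $\bm\mu_1 + \Sigma_{12}\Sigma_{22}^{-1}(\bm x_2 - \bm\mu_2)$ and covariance $\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}$, is the workhorse of the proof below, so a small numerical sketch may help. The helper name `condition_gaussian` and the correlation value are assumptions made for illustration.

```python
import numpy as np

def condition_gaussian(mu, Sigma, k, x2):
    """Mean and covariance of x1 | x2, where x1 is the first k coordinates of x."""
    mu1, mu2 = mu[:k], mu[k:]
    S11, S12 = Sigma[:k, :k], Sigma[:k, k:]
    S21, S22 = Sigma[k:, :k], Sigma[k:, k:]
    gain = S12 @ np.linalg.inv(S22)           # regression coefficient of x1 on x2
    cond_mean = mu1 + gain @ (x2 - mu2)
    cond_cov = S11 - gain @ S21
    return cond_mean, cond_cov

# Two standard normal coordinates with correlation rho (illustrative value).
rho = 0.8
mu = np.zeros(2)
Sigma = np.array([[1.0, rho], [rho, 1.0]])
cond_mean, cond_cov = condition_gaussian(mu, Sigma, 1, np.array([2.0]))
print(cond_mean, cond_cov)  # approximately rho * 2 = 1.6 and 1 - rho**2 = 0.36
```

In this bivariate case the formula reduces to the familiar statement that $x_1 | x_2 \sim \mathcal N(\rho x_2, 1 - \rho^2)$: the stronger the correlation, the more the observation moves the mean and shrinks the variance.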

We can justify the Kalman filtering steps by proving that the conditional distribution of $\bm x_{t+1}$ is given by the prediction and measurement steps. Specifically, we have the following.

Theorem 1.

![\begin{aligned} (\bm x_{t+1} | \bm y_{[0:t]}, \bm a_{[0:t]} ) & \sim \mathcal N ( \hat{\bm x}_{t+1|t } , P_{t+1|t} ) \\ (\bm x_{t+1} | \bm y_{[0:t+1]}, \bm a_{[0:t]} ) & \sim \mathcal N ( \hat{\bm x}_{t+1|t+1 } , P_{t+1|t+1} )\end{aligned}](https://appliedprobability.blog/wp-content/uploads/2019/05/bd9e42299831d3e5c803888a90c94464.png?w=840)

where $\bm y_{[0:t]} = (\bm y_0, \dots, \bm y_t)$ and $\bm a_{[0:t]} = (\bm a_0, \dots, \bm a_t)$.

Proof. We show the result by induction. The base case holds because the initial state is known and deterministic. For the inductive step, suppose that

![(\bm x_{t} | \bm y_{[0:t]}, \bm a_{[0:t]} ) \sim \mathcal N ( \hat{\bm x}_{t|t } , P_{t|t} )\, .](https://appliedprobability.blog/wp-content/uploads/2019/05/545654424b1f932b8453b816ddba47be.png?w=840)

Since $\bm x_{t+1}$ is a linear function of $\bm x_t$ (plus independent Gaussian noise), we have that

![(\bm x_{t+1} | \bm y_{[0:t]}, \bm a_{[0:t]} ) \sim \mathcal N ( \hat{\bm x}_{t+1|t } , P_{t+1|t} )\, .](https://appliedprobability.blog/wp-content/uploads/2019/05/557108bb491fb2adb9ce1e8ca994a6d5.png?w=840)

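where, by Prop 1i) and the independence of the noise, we have that

$$\hat{\bm x}_{t+1|t} = A_t \hat{\bm x}_{t|t} + B_t \bm a_t \qquad \text{and} \qquad P_{t+1|t} = A_t P_{t|t} A_t^\top + \Sigma^\epsilon_t .$$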

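Given $\bm y_{t+1} = C_t \bm x_{t+1} + \bm\eta_t$, we have by Prop 1iii) that

$$\mathrm{Cov}\big( \bm x_{t+1}, \bm y_{t+1} \,\big|\, \bm y_{[0:t]}, \bm a_{[0:t]} \big) = P_{t+1|t} C_t^\top$$

and

$$\mathrm{Cov}\big( \bm y_{t+1} \,\big|\, \bm y_{[0:t]}, \bm a_{[0:t]} \big) = C_t P_{t+1|t} C_t^\top + \Sigma^\eta_t .$$

Thus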

$$\big( [\bm x_{t+1}, \bm y_{t+1}] \,\big|\, \bm y_{[0:t]}, \bm a_{[0:t]} \big) \sim \mathcal N \left( [ \hat{\bm x}_{t+1|t}, C_t \hat{\bm x}_{t+1|t} ], \left[ \begin{array}{ll} P_{t+1|t} & P_{t+1|t} C_t^\top \\ C_t P_{t+1|t} & C_t P_{t+1|t} C_t^\top + \Sigma^\eta_t \end{array} \right] \right) .$$

Thus applying Prop 1ii), we get that

$$\begin{aligned} (\bm x_{t+1} | \bm y_{[0:t+1]}, \bm a_{[0:t]}) & = \big( (\bm x_{t+1} | \bm y_{[0:t]}, \bm a_{[0:t]}) \,\big|\, \bm y_{t+1} \big) \\ & \sim \mathcal N \Big( \hat{\bm x}_{t+1|t} + P_{t+1|t} C_t^\top [ C_t P_{t+1|t} C_t^\top + \Sigma^\eta_t ]^{-1} ( \bm y_{t+1} - C_t \hat{\bm x}_{t+1|t} ), \\ & \qquad\qquad\qquad\quad P_{t+1|t} - P_{t+1|t} C_t^\top [ C_t P_{t+1|t} C_t^\top + \Sigma^\eta_t ]^{-1} C_t P_{t+1|t} \Big) . \end{aligned}$$

The mean and covariance here are exactly $\hat{\bm x}_{t+1|t+1}$ and $P_{t+1|t+1}$ from the measurement update, which completes the induction.

$\square$
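The theorem says that running the recursion gives the same answer as conditioning on every observation at once. The sketch below checks this numerically in a deliberately simple case: a scalar, time-invariant model $x_{t+1} = a x_t + \epsilon_t$, $y_{t+1} = c\, x_{t+1} + \eta_t$ with no control and $x_0 = 0$ known. All parameter values and the observation sequence are made up for illustration.

```python
import numpy as np

a, c, var_eps, var_eta = 0.9, 1.0, 1.0, 0.5   # illustrative scalar model
ys = np.array([1.0, 0.3, -0.4, 0.8])          # made-up observations y_1..y_T
T = len(ys)

# Recursive route: alternate prediction and measurement updates.
x_hat, P = 0.0, 0.0                           # x_0 = 0 is known, so P_{0|0} = 0
for y in ys:
    x_pred, P_pred = a * x_hat, a * P * a + var_eps
    K = P_pred * c / (c * P_pred * c + var_eta)
    x_hat = x_pred + K * (y - c * x_pred)
    P = P_pred - K * c * P_pred

# Batch route: build the joint Gaussian of (x_T, y_1..y_T) and condition once.
# x_i = sum_{j <= i} a^(i-j) eps_j, so the states are a linear map L of the noise.
L = np.array([[a ** (i - j) if j <= i else 0.0 for j in range(T)] for i in range(T)])
Sigma_x = var_eps * L @ L.T                   # covariance of (x_1, ..., x_T)
Sigma_y = c * Sigma_x * c + var_eta * np.eye(T)
cov_xy = c * Sigma_x[-1, :]                   # Cov(x_T, y_1..y_T)
w = np.linalg.solve(Sigma_y, cov_xy)
x_batch = w @ ys                              # conditional mean (all prior means are zero)
P_batch = Sigma_x[-1, -1] - w @ cov_xy        # conditional variance

print(np.isclose(x_hat, x_batch), np.isclose(P, P_batch))
```

The recursive route costs a fixed amount of work per observation, whereas the batch route solves a linear system whose size grows with $T$; that is the practical payoff of the induction.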

Literature

The Kalman filter is generally credited to Kalman and Bucy. The method is now standard in many textbooks on control and machine learning.

Kalman, Rudolph E., and Richard S. Bucy. “New results in linear filtering and prediction theory.” Journal of Basic Engineering 83.1 (1961): 95-108.