- Continuous-time dynamic programs

- The HJB equation, a heuristic derivation, and a proof of optimality.

Discrete-time dynamic programming was covered previously (see Dynamic Programming). We now consider the continuous-time analogue.

- Time is continuous, $t \in \mathbb{R}_+$;
- $x_t \in \mathcal{X}$ is the state at time $t$;
- $a_t \in \mathcal{A}$ is the action at time $t$.

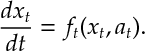

Def 1 [Plant Equation] Given a function $f_t(x,a)$, the state evolves according to the differential equation

$$\frac{dx_t}{dt} = f_t(x_t,a_t).$$

This is called the Plant Equation.

Def 2 A policy $\Pi$ chooses an action $\pi_t$ at each time $t$. The (instantaneous) cost for taking action $a$ in state $x$ at time $t$ is $c_t(x,a)$, and $\bar{c}(x)$ is the cost for terminating in state $x$ at time $T$.

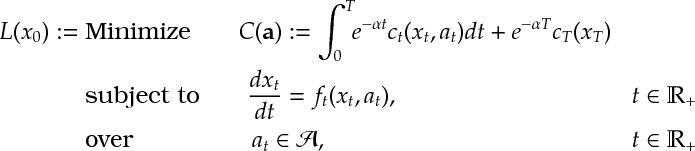

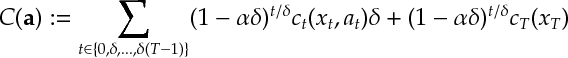

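Def 3 [Continuous Dynamic Program] Given initial state $x_0$, a dynamic program is the optimization

$$L(x_0) := \min_{\Pi}\; C(x_0,\Pi), \qquad C(x_0,\Pi) := \int_0^T e^{-\alpha t}\, c_t(x_t,\pi_t)\,dt + e^{-\alpha T}\,\bar{c}(x_T),$$

subject to the plant equation with $a_t = \pi_t$, where $c_t$ and $\bar c$ are the instantaneous and terminal costs and $\alpha \ge 0$ is a discount rate.

Further, let $C_\tau(x,\Pi)$ (resp. $L_\tau(x)$) be the objective (resp. optimal objective) when the integral is started from time $\tau$ in state $x_\tau = x$, rather than from time $0$ in state $x_0$, with discounting measured from $\tau$:

$$C_\tau(x,\Pi) := \int_\tau^T e^{-\alpha (t-\tau)}\, c_t(x_t,\pi_t)\,dt + e^{-\alpha (T-\tau)}\,\bar{c}(x_T), \qquad L_\tau(x) := \min_{\Pi} C_\tau(x,\Pi).$$

In particular, $C_T(x,\Pi) = L_T(x) = \bar{c}(x)$.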
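As a sanity check on Def 3, here is a minimal Python sketch that evaluates $C_\tau(x,\Pi)$ numerically. The scalar example (plant $f_t(x,a)=a$, cost $c_t(x,a)=x^2+a^2$, terminal cost $\bar c(x)=qx^2$, linear feedback policy $\pi_t=-kx_t$) and the function names are hypothetical, chosen only because the integral then has a closed form to compare against.

```python
import math

# Hypothetical scalar example (not from the text): plant f_t(x, a) = a,
# running cost c_t(x, a) = x**2 + a**2, terminal cost cbar(x) = q * x**2,
# and linear feedback policy pi_t = -k * x_t, so that x_s = x * exp(-k (s - tau)).

def cost_to_go_quadrature(x, tau, T, k, alpha, q, n=20_000):
    """C_tau(x, Pi) by midpoint quadrature, with discounting measured from tau."""
    h = (T - tau) / n
    total = 0.0
    for i in range(n):
        s = tau + (i + 0.5) * h              # midpoint of the i-th subinterval
        xs = x * math.exp(-k * (s - tau))    # exact state under the policy
        a = -k * xs                          # action chosen by the policy
        total += math.exp(-alpha * (s - tau)) * (xs**2 + a**2) * h
    xT = x * math.exp(-k * (T - tau))
    return total + math.exp(-alpha * (T - tau)) * q * xT**2

def cost_to_go_exact(x, tau, T, k, alpha, q):
    """Closed form of the same objective for this example."""
    r = alpha + 2 * k
    decay = math.exp(-r * (T - tau))
    return x**2 * ((1 + k**2) * (1 - decay) / r + q * decay)

x, tau, T, k, alpha, q = 1.5, 0.5, 2.0, 0.8, 0.3, 2.0
print(cost_to_go_quadrature(x, tau, T, k, alpha, q))
print(cost_to_go_exact(x, tau, T, k, alpha, q))
```

Because discounting is measured from $\tau$, in this time-homogeneous example $C_\tau(x,\Pi)$ depends on $\tau$ and $T$ only through $T-\tau$.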

When the minimization problem, in which we minimize losses given the costs incurred, is replaced with a maximization problem, in which we maximize winnings given the rewards received, the costs, the objective and the optimal objective ($c$, $C$ and $L$) are replaced with the corresponding reward notation, and minima become maxima.

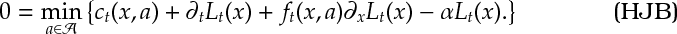

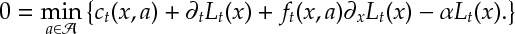

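Def 4 [Hamilton-Jacobi-Bellman Equation] For the continuous-time dynamic program of Def 3, the equation

$$0 = \min_{a}\Big\{ c_t(x,a) - \alpha L_t(x) + f_t(x,a)\,\partial_x L_t(x) + \partial_t L_t(x) \Big\},$$

together with the terminal condition $L_T(x) = \bar{c}(x)$, is called the Hamilton-Jacobi-Bellman (HJB) equation. It is the continuous-time analogue of the Bellman equation [[DP:Bellman]].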
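To see the HJB equation in action, the sketch below checks a hypothetical scalar example (plant $f_t(x,a)=a$, cost $c_t(x,a)=x^2+a^2$, not from the text). The candidate $L_t(x)=px^2$, with $p$ the positive root of $p^2+\alpha p-1=0$, makes the minimized bracket vanish. The candidate does not depend on $t$, so it solves Def 4 only with the matching terminal cost $\bar c(x)=px^2$. The function names are illustrative.

```python
import math

# Hypothetical example (not from the text): f_t(x, a) = a, c_t(x, a) = x**2 + a**2,
# candidate L_t(x) = p * x**2 with p**2 + alpha * p - 1 = 0 (so dL/dt = 0).

def hjb_bracket(a, x, alpha, p):
    """c - alpha L + f dL/dx + dL/dt for the candidate L, at action a."""
    L, dL_dx, dL_dt = p * x**2, 2 * p * x, 0.0
    return (x**2 + a**2) - alpha * L + a * dL_dx + dL_dt

def hjb_residual(x, alpha, p):
    """Minimum of the bracket over a: a convex quadratic in a, minimized at a = -p x."""
    return hjb_bracket(-p * x, x, alpha, p)

alpha = 0.3
p = (-alpha + math.sqrt(alpha**2 + 4)) / 2
for x in (-2.0, 0.5, 3.0):
    print(x, hjb_residual(x, alpha, p))   # zero up to floating-point rounding
```

For a different coefficient, say $p=1$, the residual is $x^2(1-\alpha p-p^2) = -\alpha x^2 \neq 0$, so that quadratic does not satisfy the HJB equation.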

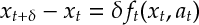

Ex 1 [A heuristic derivation of the HJB equation] Argue that, for $\delta$ small, $x_t$ satisfying the recursion

$$x_{t+\delta} = x_t + \delta\, f_t(x_t,a_t)$$

is a good approximation to the plant equation. (A heuristic argument will suffice.)
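A quick numerical illustration of Ex 1, assuming a hypothetical plant $f_t(x,a) = -x + a$ and the constant action $a_t = 1$. For this choice the plant equation has the exact solution $x_t = 1 + (x_0-1)e^{-t}$. The sketch iterates the recursion and reports the error at time $T$; all names are illustrative.

```python
import math

# Hypothetical plant (not from the text): f_t(x, a) = -x + a, constant action a_t = 1.

def f(t, x, a):
    return -x + a

def policy(t, x):
    return 1.0

def euler_path_end(x0, T, n):
    """Iterate x_{t+delta} = x_t + delta * f_t(x_t, a_t) for n steps of size delta = T / n."""
    delta = T / n
    x, t = x0, 0.0
    for _ in range(n):
        a = policy(t, x)
        x = x + delta * f(t, x, a)
        t += delta
    return x

def exact_end(x0, T):
    """Exact solution of dx/dt = -x + 1 at time T."""
    return 1.0 + (x0 - 1.0) * math.exp(-T)

for n in (10, 100, 1000):
    err = abs(euler_path_end(3.0, 1.0, n) - exact_end(3.0, 1.0))
    print(f"delta = {1.0 / n:.4f}  error = {err:.2e}")
```

The error falls roughly in proportion to $\delta$, as expected of this first-order (Euler) scheme.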

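Ex 2 [Continued] Argue (heuristically) that the following is a good approximation to the objective of a continuous-time dynamic program:

$$C(x_0,\Pi) \approx \sum_{k=0}^{T/\delta-1} (1-\alpha\delta)^{k}\, c_{k\delta}(x_{k\delta},\pi_{k\delta})\,\delta + (1-\alpha\delta)^{T/\delta}\,\bar{c}(x_T).$$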
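The sketch below computes the sum in Ex 2 along the Ex 1 recursion and compares it with the integral of Def 3. It uses a hypothetical example (plant $f_t(x,a)=a$, cost $x^2+a^2$, terminal cost $qx^2$, feedback $a=-kx$) chosen because the integral has a closed form; the names are illustrative.

```python
import math

# Hypothetical example (not from the text): f(x, a) = a, c(x, a) = x**2 + a**2,
# terminal cost q * x**2, linear feedback a = -k * x.

def discrete_objective(x0, T, k, alpha, q, n):
    """Ex 2's sum: Euler states, (1 - alpha*delta)^j discounting, step delta = T / n."""
    delta = T / n
    x, total = x0, 0.0
    for j in range(n):
        a = -k * x
        total += (1 - alpha * delta) ** j * (x**2 + a**2) * delta
        x = x + delta * a                       # Ex 1 recursion
    return total + (1 - alpha * delta) ** n * q * x**2

def continuous_objective(x0, T, k, alpha, q):
    """The integral of Def 3 in closed form for this example."""
    r = alpha + 2 * k
    decay = math.exp(-r * T)
    return x0**2 * ((1 + k**2) * (1 - decay) / r + q * decay)

exact = continuous_objective(1.0, 2.0, 0.8, 0.3, 2.0)
for n in (100, 1000, 10_000):
    approx = discrete_objective(1.0, 2.0, 0.8, 0.3, 2.0, n)
    print(f"delta = {2.0 / n:g}  gap = {abs(approx - exact):.2e}")
```

Shrinking $\delta$ by a factor of 100 should shrink the gap by roughly the same factor, since each approximation involved is first order in $\delta$.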

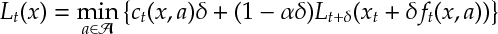

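Ex 3 [Continued] Show that the Bellman equation for the discrete-time dynamic program with the objective of Ex 2 and the plant equation of Ex 1 is

$$L_t(x) = \min_{a}\Big\{ c_t(x,a)\,\delta + (1-\alpha\delta)\, L_{t+\delta}\big(x + \delta f_t(x,a)\big) \Big\}.$$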
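Consider the hypothetical linear-quadratic example $f_t(x,a)=a$, $c_t(x,a)=x^2+a^2$, $\bar c(x)=qx^2$ (not from the text). Here the value stays quadratic, $L_t(x)=p_tx^2$, so the Bellman equation of Ex 3 can be run backward from $T$ by evaluating it at $x=1$. The sketch minimizes over $a$ numerically with golden-section search (a swapped-in generic method). It then compares the result with the closed-form solution of $\frac{dp_t}{dt} = p_t^2+\alpha p_t-1$, $p_T=q$, which is what the HJB equation reduces to for this example. All names are illustrative.

```python
import math

# Hypothetical LQ example (not from the text): f_t(x, a) = a, c_t(x, a) = x**2 + a**2,
# terminal cost cbar(x) = q * x**2. Assumption: L_t(x) = p_t * x**2 at every step,
# so evaluating the Bellman equation at x = 1 gives p_t directly.

def golden_min(g, lo, hi, iters=100):
    """Minimum value of a unimodal g on [lo, hi] by golden-section search (no caching)."""
    inv_phi = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    for _ in range(iters):
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if g(c) < g(d):
            b = d          # minimum lies in [a, d]
        else:
            a = c          # minimum lies in [c, b]
    return g((a + b) / 2)

def bellman_p0(alpha, q, T, n):
    """Run L_t(x) = min_a {c_t(x,a) delta + (1 - alpha delta) L_{t+delta}(x + delta a)} back from T."""
    delta = T / n
    p = q                                       # L_T(x) = cbar(x) = q x^2
    for _ in range(n):
        m = (1 - alpha * delta) * p             # discounted coefficient of L_{t+delta}
        p = golden_min(lambda a: (1 + a**2) * delta + m * (1 + delta * a) ** 2, -100.0, 100.0)
    return p

def riccati_p0(alpha, q, T):
    """Closed-form p_0 for dp/dt = p^2 + alpha p - 1 with p_T = q."""
    s = math.sqrt(alpha**2 + 4)
    p_plus, p_minus = (-alpha + s) / 2, (-alpha - s) / 2
    rho = (q - p_plus) / (q - p_minus) * math.exp(-(p_plus - p_minus) * T)
    return (p_plus - rho * p_minus) / (1 - rho)

alpha, q, T = 0.3, 0.0, 1.0
print("continuous:", riccati_p0(alpha, q, T))
for n in (10, 100, 1000):
    print(f"n = {n}: discrete Bellman p_0 = {bellman_p0(alpha, q, T, n):.6f}")
```

Minimizing by hand gives the discrete update $p_t = \delta + m/(1+\delta m)$ with $m=(1-\alpha\delta)p_{t+\delta}$. Expanding it in $\delta$ gives $p_t = p_{t+\delta} + \delta(1-\alpha p_{t+\delta}-p_{t+\delta}^2) + O(\delta^2)$, which recovers the continuous equation above as $\delta \to 0$.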

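Ex 4 [Continued] Argue, by letting $\delta$ approach zero, that the above Bellman equation approaches the equation

$$0 = \min_{a}\Big\{ c_t(x,a) - \alpha L_t(x) + f_t(x,a)\,\partial_x L_t(x) + \partial_t L_t(x) \Big\},$$

that is, the HJB equation.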

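Ex 5 [Optimality of HJB] Suppose that a policy $\Pi$ has a value function $C_t(x,\Pi)$ that satisfies the HJB equation (with $L_t$ replaced by $C_t(\cdot,\Pi)$) for all $t$ and $x$. Show that $\Pi$ is an optimal policy.

(Hint: consider $\frac{d}{dt}\big(e^{-\alpha t} C_t(\tilde{x}_t,\Pi)\big)$, where $\tilde{x}_t$ are the states under another policy $\tilde{\Pi}$.)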

Answers

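Ans 1 This follows from the definition of the derivative: rearranging the recursion gives $\frac{x_{t+\delta}-x_t}{\delta} = f_t(x_t,a_t)$, and the left-hand side tends to $\frac{dx_t}{dt}$ as $\delta \to 0$.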

Ans 2 This follows from the definition of the (Riemann) integral, since $(1-\alpha\delta)^{t/\delta} \to e^{-\alpha t}$ as $\delta \to 0$.
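A short numerical check of the limit used in Ans 2; the parameter values are arbitrary. Taking logarithms, $\frac{t}{\delta}\log(1-\alpha\delta) = -\alpha t - \tfrac{1}{2}\alpha^2 t\delta + O(\delta^2)$, so the gap should be close to $\tfrac12\alpha^2 t\delta\, e^{-\alpha t}$.

```python
import math

def discrete_discount(alpha, t, delta):
    """(1 - alpha*delta)^(t/delta), the discount weight in Ex 2's sum (needs alpha*delta < 1)."""
    return (1 - alpha * delta) ** (t / delta)

alpha, t = 0.3, 2.0
for delta in (0.1, 0.01, 0.001):
    gap = math.exp(-alpha * t) - discrete_discount(alpha, t, delta)
    predicted = 0.5 * alpha**2 * t * delta * math.exp(-alpha * t)   # first-order term
    print(f"delta={delta}: gap={gap:.3e}, first-order prediction={predicted:.3e}")
```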

Ans 3 Immediate from the discrete-time Bellman equation, with time step $\delta$ and per-step discount factor $1-\alpha\delta$.

Ans 4 Subtract $L_t(x)$ from each side of the Bellman equation in Ex 3, divide by $\delta$ and let $\delta \to 0$. Further note that, writing $f = f_t(x,a)$,

$$\frac{(1-\alpha \delta) L_{t+\delta}(x+\delta f) - L_t(x)}{\delta} \xrightarrow[\delta \rightarrow 0]{} \partial_t L_t(x) + f_t(x,a)\,\partial_x L_t(x) - \alpha L_t(x),$$

which follows from the first-order Taylor expansion $L_{t+\delta}(x+\delta f) = L_t(x) + \delta\,\partial_t L_t(x) + \delta f\,\partial_x L_t(x) + o(\delta)$.
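The limit in Ans 4 can be checked numerically for any smooth function. The sketch uses the hypothetical test function $L_t(x) = e^{-t}x^3 + tx$ (not from the text), with arbitrary values of $t$, $x$, $f$ and $\alpha$. It prints the gap between the difference quotient and $\partial_t L_t + f\,\partial_x L_t - \alpha L_t$.

```python
import math

# Hypothetical smooth test function (not from the text) and its exact partial derivatives.
def L(t, x):
    return math.exp(-t) * x**3 + t * x

def dL_dt(t, x):
    return -math.exp(-t) * x**3 + x

def dL_dx(t, x):
    return 3 * math.exp(-t) * x**2 + t

def bellman_quotient(t, x, f, alpha, delta):
    """((1 - alpha*delta) L_{t+delta}(x + delta f) - L_t(x)) / delta, as in Ans 4."""
    return ((1 - alpha * delta) * L(t + delta, x + delta * f) - L(t, x)) / delta

def claimed_limit(t, x, f, alpha):
    """d_t L_t(x) + f d_x L_t(x) - alpha L_t(x)."""
    return dL_dt(t, x) + f * dL_dx(t, x) - alpha * L(t, x)

t, x, f, alpha = 0.5, 1.2, 0.7, 0.3
for delta in (1e-2, 1e-3, 1e-4):
    gap = abs(bellman_quotient(t, x, f, alpha, delta) - claimed_limit(t, x, f, alpha))
    print(f"delta={delta:g}: gap={gap:.2e}")
```

For a smooth $L$ like this one, the gap shrinks roughly in proportion to $\delta$.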

Ans 5 Using the shorthand $C = C_t(\tilde{x}_t,\Pi)$, with its partial derivatives evaluated at $(t,\tilde{x}_t)$, and the chain rule with $\frac{d\tilde{x}_t}{dt} = f_t(\tilde{x}_t,\tilde{\pi}_t)$:

$$\begin{aligned} -\frac{d}{dt} \left(e^{-\alpha t} C_t(\tilde{x}_t,\Pi)\right) &= e^{-\alpha t} \left\{ c_t(\tilde{x}_t,\tilde{\pi}_t) - \left[ c_t(\tilde{x}_t,\tilde{\pi}_t) - \alpha C + f_t(\tilde{x}_t,\tilde{\pi}_t)\, \partial_x C + \partial_t C\right]\right\}\\ &\leq e^{-\alpha t} c_t(\tilde{x}_t,\tilde{\pi}_t). \end{aligned}$$

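The inequality holds because the term in square brackets is the expression minimized in the HJB equation: its minimum over actions is zero, so it is non-negative at the action $\tilde{\pi}_t$. Integrating from $0$ to $T$ and using $C_T(\tilde{x}_T,\Pi) = \bar{c}(\tilde{x}_T)$ gives

$$C_0(x_0,\Pi) \le \int_0^T e^{-\alpha t} c_t(\tilde{x}_t,\tilde{\pi}_t)\,dt + e^{-\alpha T}\bar{c}(\tilde{x}_T) = C_0(x_0,\tilde{\Pi}),$$

so no policy $\tilde{\Pi}$ does better than $\Pi$, i.e. $\Pi$ is optimal.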
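A numerical illustration of Ex 5 uses the hypothetical linear-quadratic example $f_t(x,a)=a$, $c_t(x,a)=x^2+a^2$ with terminal cost $\bar c(x)=px^2$, where $p$ is the positive root of $p^2+\alpha p-1=0$ (not from the text). For the feedback policy $a=-px$ one can check that $C_t(x,\Pi)=px^2$, which satisfies the HJB equation, so by Ex 5 no other policy should do better. The sketch compares it against a grid of other linear feedback gains $k \ge 0$. This is a spot check over one family of policies, not a proof.

```python
import math

# Hypothetical LQ example (not from the text): f(x, a) = a, c(x, a) = x**2 + a**2,
# discount alpha, terminal cost p * x**2, p the positive root of p**2 + alpha*p - 1 = 0.

def feedback_cost(x0, T, k, alpha, q):
    """C_0(x0, Pi_k) for the linear feedback a = -k x, in closed form (needs alpha + 2k > 0)."""
    r = alpha + 2 * k
    decay = math.exp(-r * T)
    return x0**2 * ((1 + k**2) * (1 - decay) / r + q * decay)

alpha, T, x0 = 0.3, 2.0, 1.5
p = (-alpha + math.sqrt(alpha**2 + 4)) / 2

best = feedback_cost(x0, T, p, alpha, q=p)          # the HJB policy: equals p * x0**2
gains = [0.05 * i for i in range(61)]                # alternative gains k in [0, 3]
others = [feedback_cost(x0, T, k, alpha, q=p) for k in gains]

print(f"HJB policy: {best:.6f}   best alternative on the grid: {min(others):.6f}")
```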